AI for SWEs 72: Gemini 3 Pro, Claude Opus 4.5, and Olmo 3

Intelligence just got a whole lot smarter

Welcome to the nearly 1000 new subscribers to AI for SWEs in the past week (yes, we changed our name—more on that below!). We’re excited to have you and to explore building AI together.

This is our once-a-week AI roundup focusing on the current events, resources, releases, and more that are most important to software engineers. There are many AI roundups, but this one focuses specifically on what you need to know and learn to build better.

This roundup includes important headlines from the past week, what they mean, and why they’re important. I include my picks for must-read/must-watch resources from the past week. I then rapid fire off all the other important things that happened and finish with some career resources (coming soon to paid subs).

This week is a doozy. I’d love to know: What are your impressions of Gemini 3 Pro and Claude Opus 4.5? Benchmarks are one thing, actual use is another. Leave a comment below to share!

Always be (machine) learning,

Logan

Google Releases Gemini 3 Showing LLM Performance Isn’t Stopping

Google released Gemini 3 Pro and made it available in most Google AI products almost immediately. It topped most major benchmarks, including coding and multimodal reasoning. Early usage reports suggest it is very good at frontend development and roughly on par with other models for backend work. Personally, I’ve found it much better at coding and reasoning all around, but I’ve seen it struggle to remember fundamental information within conversations/tasks. My guess is this will be fixed over time.

Google also released Antigravity, the successor to Windsurf built with the talent acquired in the Windsurf deal. Antigravity is an agent-first IDE that focuses on agent orchestration for planning, implementing, and debugging code directly in the developer workflow. You can watch this video to understand how it works.

Google also released Nano Banana Pro. While Gemini 3 Pro and Antigravity were both large releases, Nano Banana Pro absolutely blew my mind. It’s by far the best image generation model, but it can also generate charts and infographics with text incredibly accurately. Think about a prompt such as “Generate a good graphic to visualize this concept” and Nano Banana Pro can do it. It even supports interactive images, meaning users can generate graphics and learn by interacting with them. The Gemini app can also detect whether an image is generated by Google AI.

If you want to read more about Gemini 3 Pro, read this analysis by Simon Willison and this article by Charlie Guo. It’s also worth checking out some of the more creative creations with Gemini 3 Pro on X here.

Anthropic Releases Opus 4.5 Right After ALSO Showing LLM Performance Isn’t Stopping

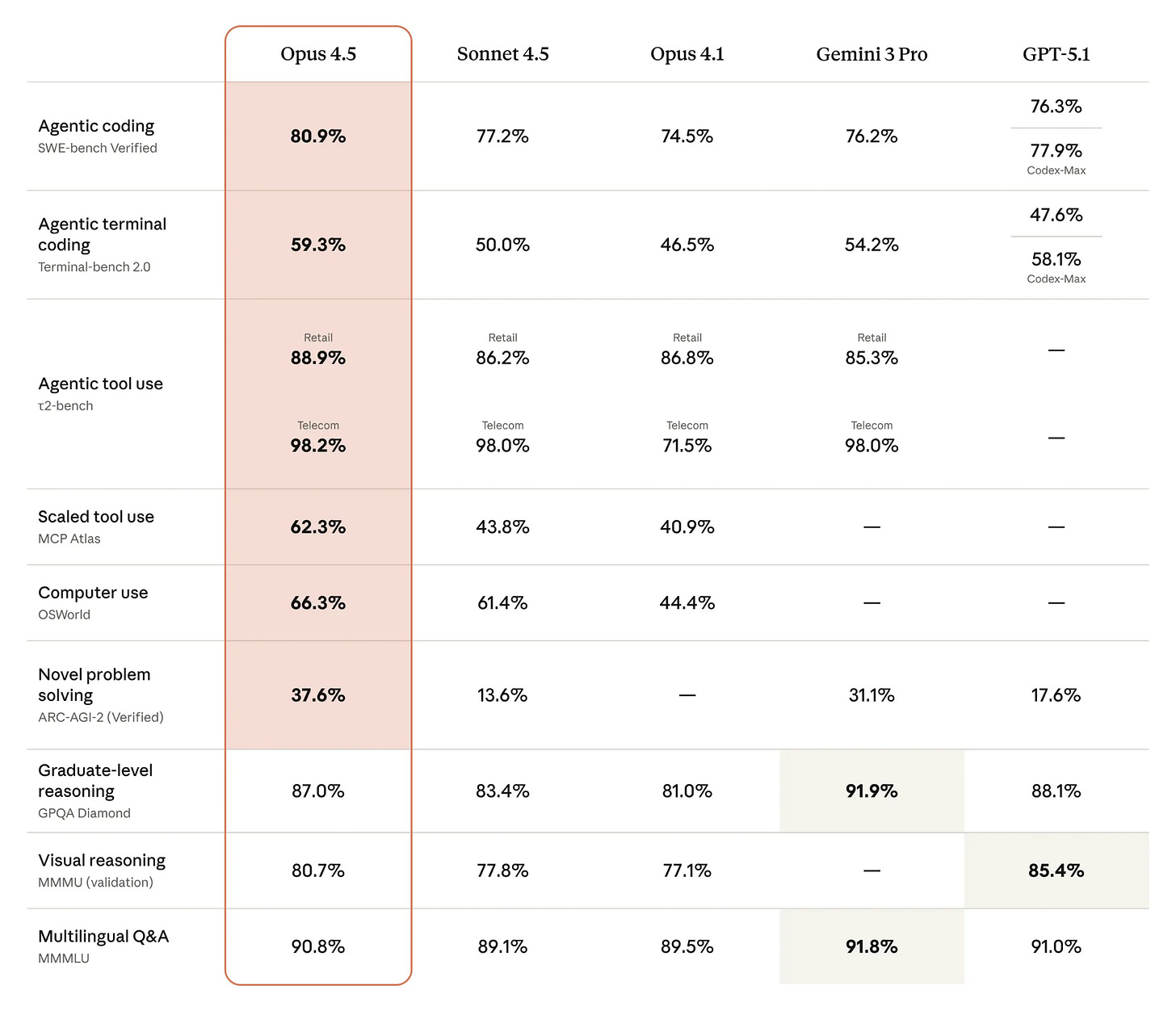

Anthropic released Claude Opus 4.5 right after the release of Gemini 3. It immediately took back Claude’s crown as the best model for coding and other engineering-related use cases (see graphic above). Opus 4.5 also introduces integrations with Chrome and Excel, targeting agentic workflows that require managing large codebases and documents.

Most importantly, Anthropic seems to have fixed the rate limits that were their customers’ biggest complaint. Whether in Claude Code or the Claude app, the most difficult part of using Claude was rate and performance limiting. Opus 4.5 has much higher rate limits for users, meaning many won’t have to be stingy with their Opus use. Opus 4.5 is also much less expensive via the API.

The Claude app will now auto-compact conversations so users don’t have to start a brand new conversation when their context window is full. This is huge because the context limit is likely what kept a lot of Anthropic fans from going all-in on Claude. Hitting it made most tasks within the Claude app infeasible.

ML for Software Engineers Has Changed to AI for Software Engineers

I did some testing with the name of this newsletter recently. Unsurprisingly, AI significantly outperformed ML in the title. It’s a much more recognizable term and much less intimidating. This newsletter will be AI for Software Engineers going forward, but the content will remain the same.

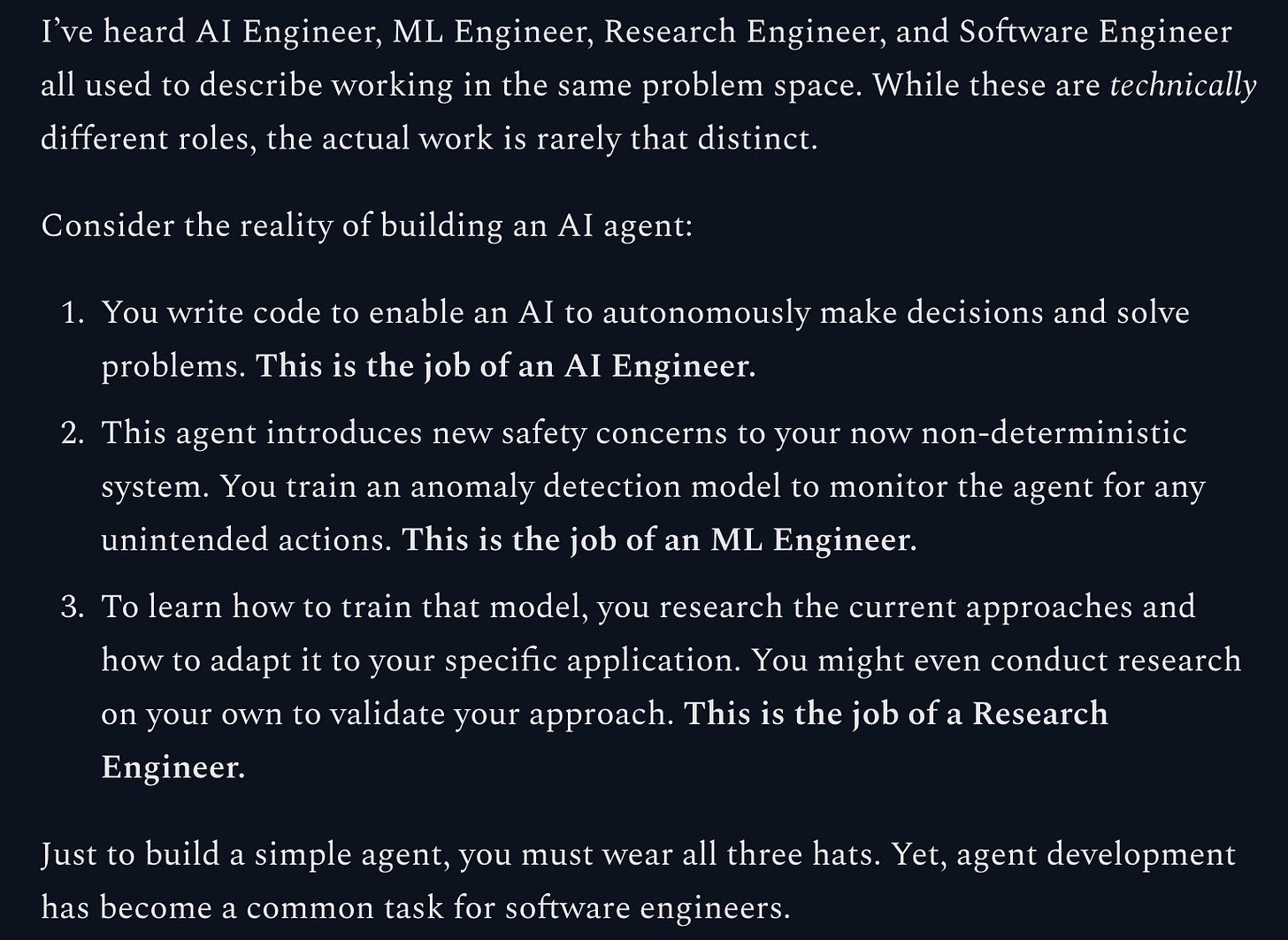

You might wonder: Why not call it something related to ‘ML Engineering’ or ‘AI Engineering’? Isn’t that what AI for Software Engineers really is? It’s a good question, but the answer is: not quite.

Tech loves to use buzzwords. ML engineer, AI engineer, research engineer, and software engineer are all used in different ways to try to delineate job responsibilities. In reality, most job postings don’t fit into a single one of those roles, and the work that engineers do in AI rarely does either. So, rather than focus on one of the above, I’m going to share the bits of each that are important if you want to work in AI as an engineer.

If you want a specific example of AI/ML/Research Engineer overlap, check out the updated About page for this newsletter (see the image above).

Google, AWS, and Meta Expand Infrastructure to Remain Big Players for the Long Term

AWS recently announced a $50 billion investment to build AI infrastructure specifically for the U.S. government, adding 1.3 gigawatts of capacity for federal missions. Google committed to 1,000x infrastructure growth over the next 5 years and is partnering with Westinghouse to deploy nuclear reactors for power. Meta is taking a novel approach to energy security by seeking federal approval to trade electricity directly, allowing them to secure long-term power for their data centers and resell excess capacity.

There’s also a huge monetary opportunity for software engineers working in AI infrastructure. Fluidstack and CoreWeave are just two of the unicorn startups that have greatly increased their valuations in a short amount of time. There’s huge demand in this space and many players will fill it. If you can work in AI infra or on optimizing AI hardware, I advise you not to pass it up.

Ai2 Releases the US’s Only Truly Open Model

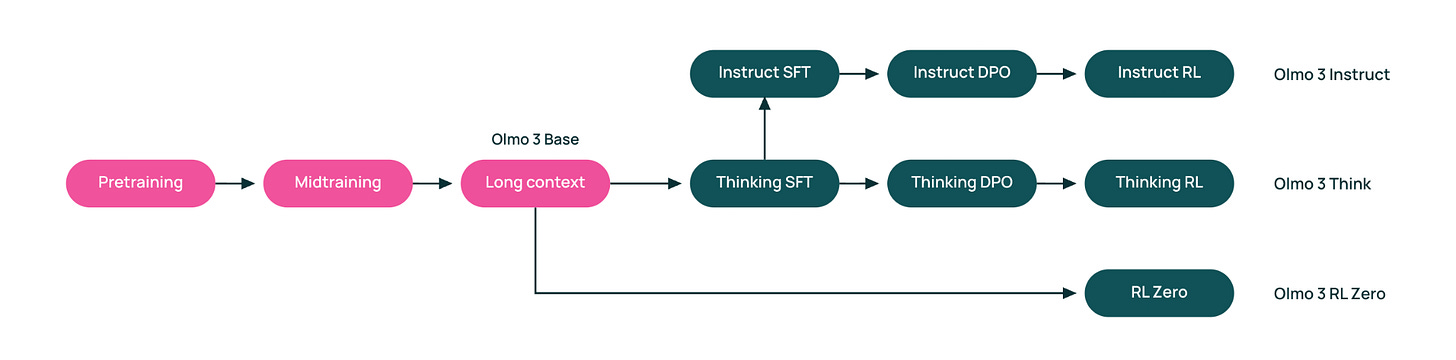

Allen AI has released Olmo 3, a massive milestone for open-source AI in the U.S. Unlike other open model releases that hide key model details, Olmo 3 includes the full training data, code, weights, and logs for its 7B and 32B models. It also competes at the frontier of open models, rivaling competitors like Qwen, Gemma, and more.

It’s important to understand that open source software gives you the code needed to understand how a piece of software works. For machine learning models to reach this same degree of openness, the training data along with how that data is used must be provided.

Read Nathan Lambert’s analysis for more on Olmo 3.

Waymo Expands Across the US

I wrote about how big of a deal Waymo is back in March of this year and everyone is catching on as Waymo expands across the US. Santa Clarita, San Diego, Minneapolis, Tampa, New Orleans, and Miami were all recently added to the list of Waymo-available cities.

Not only is Waymo an incredible feat of engineering, but it also has massive implications for improving the lives of everyday Americans. Autonomous vehicles have the potential to greatly decrease road injury and death, decrease transportation costs, save on time spent in traffic, and make transportation much more widely accessible.

More updates on Waymo expansions can be found here.

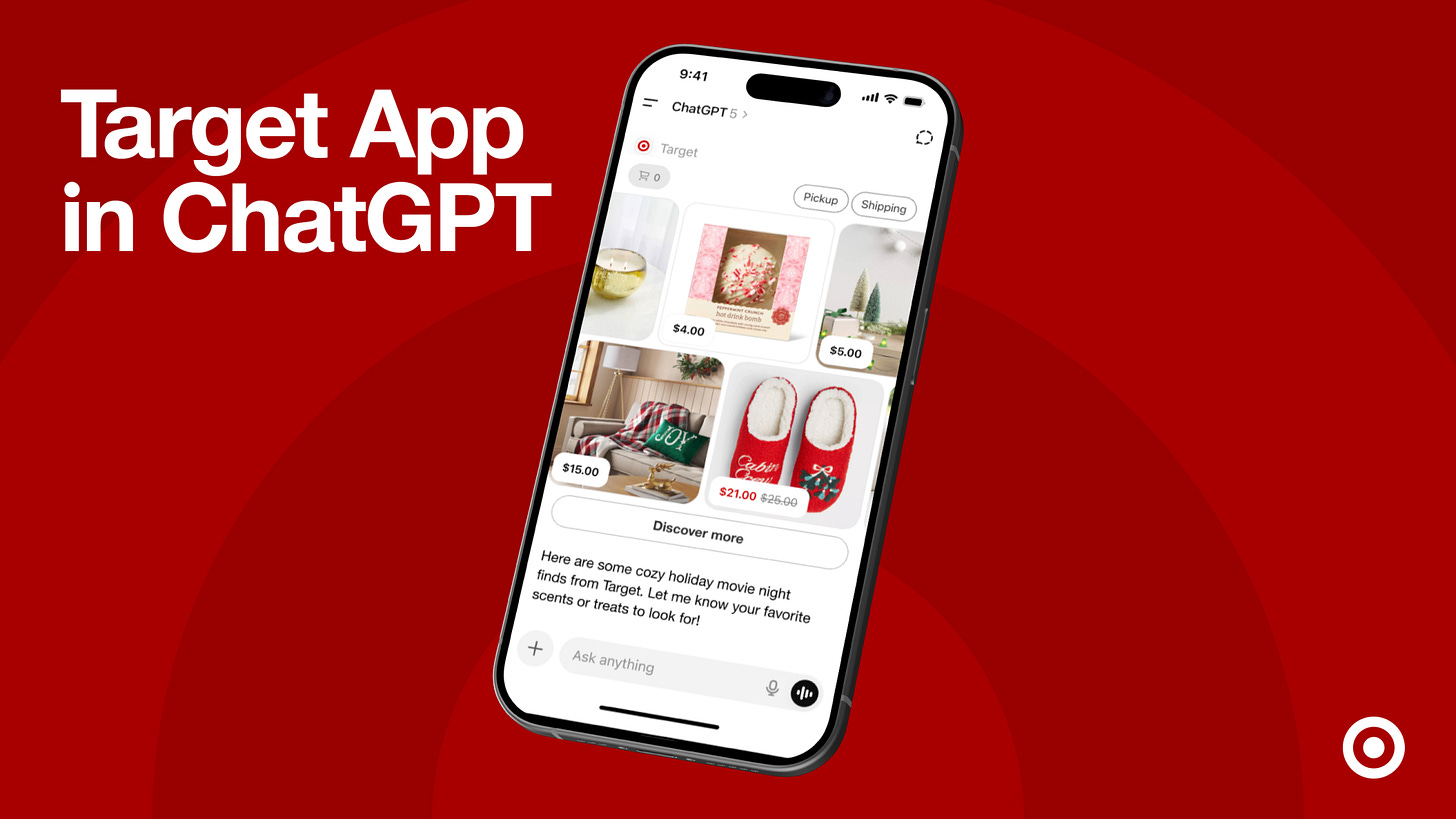

OpenAI Partners with Target and Intuit

OpenAI has partnered with Target to bring ChatGPT to the Target app and integrate it with Target’s internal supply chain tools. OpenAI has also partnered with Intuit to power their financial products with automation. Both continue OpenAI’s long push to integrate ChatGPT with more products and collaborate with more enterprises, especially within ecommerce.

OpenAI also released GPT-5.1-Codex-Max, a model designed for long-horizon agentic coding tasks that can persist context across sessions. The ChatGPT app has also been updated with a new shopping research feature and the global rollout of Group Chats. I haven’t actually tried the Group Chats feature out yet. If you have, leave a comment below to let us know how it is and what you find useful.

Don’t miss last week’s AI for SWEs:

We discussed a brainrot IDE and why it’s such a terrible idea. We also touched on Yann LeCun’s bet and my hypothesis on local ML models saving you $100+ per month. You can check it out here: https://aiforswes.com/p/71

Logan’s Picks

Hugging Face CEO says we’re in an ‘LLM bubble,’ not an AI bubble: Clem Delangue argues we are in an “LLM bubble” that will likely burst next year, distinguishing this from the broader AI industry which remains robust. He predicts a market correction away from massive, one-size-fits-all models toward smaller, specialized models that are cheaper to train and easier to deploy on enterprise infrastructure. Hugging Face is betting on this shift, maintaining capital efficiency while competitors burn billions on compute.

Group Relative Policy Optimization (GRPO), by Cameron R. Wolfe, Ph.D.: GRPO is emerging as the preferred method for training reasoning models like DeepSeek-R1. By eliminating the need for a value function critic, GRPO significantly reduces compute and memory overhead compared to PPO. It enables large-scale reinforcement learning—often without supervised fine-tuning—allowing models to develop emergent reasoning behaviors through simple rule-based or neural reward signals.
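To make the "no critic" idea concrete, here is a minimal sketch of the group-relative advantage at the heart of GRPO: sample several answers to the same prompt, score them, and normalize each reward against the group's own mean and standard deviation. The function name, the example rewards, and the choice of population standard deviation are my assumptions for illustration, and this leaves out the clipped policy update and KL penalty that a full trainer would need.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Score each sampled answer relative to its own group.

    The group mean acts as the baseline, which is the role a learned
    value function (critic) would play in PPO.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population std; implementations vary
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rule-based rewards for four sampled answers to one prompt:
# 1.0 if the final answer was correct, 0.0 otherwise.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)

# Correct answers get a positive advantage, incorrect ones a negative one.
print(advantages)
```

Because the baseline comes from the group itself, there is no second network to train or keep in memory, which is where the compute savings over PPO come from.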

AI Engineering in 2025: What It Really Takes to Reach Production, by Devansh: AI Engineering must shift from “building” to “gardening.” With 85% of AI projects failing to deliver value, the focus must move from treating LLMs as deterministic software components to managing them as evolving ecosystems prone to drift and decay. Success now depends on adopting rigorous systems disciplines—continuous monitoring, drift control, and lifecycle management—rather than just shipping code and moving on.

Model Quantization: Concepts, Methods, and Why It Matters: A deep technical guide on deploying large models on constrained hardware. It explains how reducing precision from FP32 to INT8 or FP16 shrinks model size and memory bandwidth usage with minimal loss of accuracy. It covers essential techniques like post-training quantization and quantization-aware training, detailing how tools like NVIDIA’s TensorRT use calibration and per-channel scaling to optimize inference.
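If you have never seen quantization written out, the core of it fits in a few lines. Below is a rough sketch of symmetric per-tensor INT8 quantization in plain Python. It is not how TensorRT does it (real toolchains add calibration and per-channel scales), and the function names and example weights are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One scale for the whole tensor; per-channel schemes keep one per channel.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]


# Hypothetical FP32 weights.
weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding error per weight is at most half a quantization step.
max_error = max(abs(w, ) if False else abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now takes one byte instead of four, and the price is a small rounding error bounded by half the scale. Notice that the tiny weight rounds to zero, which is one reason calibration and finer-grained scales matter in practice.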

How evals drive the next chapter in AI for businesses: Evals are the only way to bridge the gap between vague business goals and performant AI products. This framework emphasizes creating “golden” datasets—outcomes mapped to specific inputs—to establish a rigorous error taxonomy. By implementing a continuous feedback loop where production logs are reviewed and fed back into the evaluation set, teams can create a compounding data advantage that outperforms simple A/B testing.
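Here is a small sketch of what a golden dataset and error taxonomy can look like in code. Everything in it is made up for illustration: the intent labels, the keyword-based `toy_model` standing in for a real AI system, and the `run_eval` helper.

```python
from collections import Counter

# Hypothetical golden dataset: each input is paired with the expected outcome.
GOLDEN_SET = [
    {"input": "I want my money back", "expected": "refund"},
    {"input": "Where is my package?", "expected": "shipping"},
    {"input": "Cancel my subscription", "expected": "cancel"},
    {"input": "My package arrived broken, refund please", "expected": "refund"},
]


def toy_model(text):
    """Stand-in for the system under test (a real one would call a model)."""
    lowered = text.lower()
    if "package" in lowered:
        return "shipping"
    if "money back" in lowered or "refund" in lowered:
        return "refund"
    if "cancel" in lowered:
        return "cancel"
    return "unknown"


def run_eval(model, golden_set):
    """Return accuracy plus a tally of failures grouped by error type."""
    errors = Counter()
    passed = 0
    for case in golden_set:
        got = model(case["input"])
        if got == case["expected"]:
            passed += 1
        else:
            errors[f"expected {case['expected']}, got {got}"] += 1
    return passed / len(golden_set), errors


accuracy, errors = run_eval(toy_model, GOLDEN_SET)
print(accuracy, dict(errors))
```

The feedback loop from the article amounts to appending reviewed production failures to `GOLDEN_SET` and rerunning this on every change.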

Rapid Fire

Dev Tools & Agents

Salesforce introduced Agentforce Observability to log and visualize agent reasoning steps / AWS launched Kiro, a CLI tool for building agents with property-based testing / Microsoft released Fara-7B, an efficient agentic model for computer use / Stack Overflow launched Stack Overflow Internal to feed clean data to enterprise AI agents / A new Workspace extension for Gemini CLI brings Google Docs and Calendar into the terminal / Amazon is using autonomous agents to automate red-team/blue-team cybersecurity testing / Agent design remains difficult, often requiring direct SDK use over leaky abstractions

Applications & Models

Alibaba’s Qwen AI app hit 10 million downloads in a week, outpacing early ChatGPT adoption / NTT deployed a lightweight LLM optimized for Japanese that runs on a single GPU / Google’s WeatherNext 2 forecasts weather 8x faster than prior methods using a single TPU (also here) / Meta’s WorldGen creates traversable 3D worlds from text prompts in minutes / Poe now supports group chats with up to 200 participants and multiple AI models / Google Search added agentic travel planning to build itineraries and check flight deals / Prof. Tom Yeh released a hand-drawn calendar illustrating 24 AI architectures that I’m 100% asking for for Christmas

Research & Safety

Physical Intelligence trained π0.6, a VLA model that uses RL to learn from autonomous experience and corrections (also here) / Google proposed Nested Learning to give models long-term, associative memory / Amazon researchers introduced FiSCo, a pipeline to measure fairness in long-form LLM outputs / Andrej Karpathy argues that AI detectors are unreliable and assessment must shift to proctored exams / The new Humane Bench tests if chatbots prioritize user wellbeing over engagement / Military integration of AI is changing the rules of war, raising concerns about bias and oversight / DeepMind’s Nobel laureate discusses the future of AlphaFold and protein prediction

Upskill (Coming soon!)

Coming soon to this section will be weekly problems (ML, system design, and software-related) to help you improve your career along with information on who’s hiring, the direction the market is going, and the skills you should learn to level up your career.

Become a paid subscriber to AI for Software Engineers to get this in your inbox!
