What You Need to Know for 2026 | AI for Software Engineers 74

In-demand job skills, MCP goes to The Linux Foundation, OpenAI’s Code Red, and more

Hi Everyone!

Welcome to the weekly update edition of AI for Software Engineers! I go through everything software engineers should understand about AI by filtering noise and contextualizing what matters. I tend to focus on current events, tooling, research, and other interesting content.

This was an incredible week. In this edition, we discuss:

The AI industry’s shift toward practicality

The Linux Foundation taking over MCP

OpenAI’s Code Red and what that actually means

Developer tool updates

The in-demand skills for the 2026 software engineering job market

Resources for learning those skills

—

tl;dr:

It’ll be easier for software engineers to break into AI next year. If you want to, focus on building skills in AI cybersecurity, agent development, and MLOps, and aim specifically to understand agent workflows, evals, and protocols. Agents will remain a primary focus, but the complexity of building systems with them is now much better understood.

MCP is now under the stewardship of The Linux Foundation to encourage open standards. OpenAI’s Code Red is about aligning their priorities to become revenue positive. Many developer tools have seen updates or new releases. Companies are actually seeing a return on their agent development investments.

More detail on all of this below, plus some interesting opportunities.

Before we get into it, some housekeeping.

A few updates:

We’ve got a new logo! It’ll represent the newsletter, and you’ll be seeing more of it. It might even be on some swag soon…

As always, I’m ironing out the format of these weekly updates to make them more useful, both for me when doing my research and for you when reading. I’m trying to make it a bit more interactive. You can now leave comments to help drive the direction of the newsletter. I’m also trying to find resources for you to learn the skills I write about.

I’m working on a way to get readers more involved in the newsletter. Don’t forget that we’ve got an ML roadmap to help anyone learn ML fundamentals and an AI for SWEs repo to get hands-on with building AI-related products. I’m looking to make these resources more community-oriented soon.

Partner with AI for Software Engineers!

If you want to support AI for Software Engineers and get viewed by 11,000+ developers each week, reach out to sponsor an issue. I’m particularly interested in excellent learning resources, developer tools, and career opportunities.

What’s Been on My Mind

This past week has seen a shift in the AI industry toward practicality. Recently, we’ve seen influential voices mention that the economic impact of AI hasn’t been living up to the hype. Most notably, we’ve seen Andrej Karpathy and Ilya Sutskever mention this during their most recent appearances on the Dwarkesh podcast.

I’ve been thinking about this a lot for two reasons:

The actual statistics about the job market don’t match what I’m noticing about everyday work.

I’ve been working with world-class engineers on integrating AI into developer workflows at work, and it’s much more complicated and cutting-edge than we had anticipated.

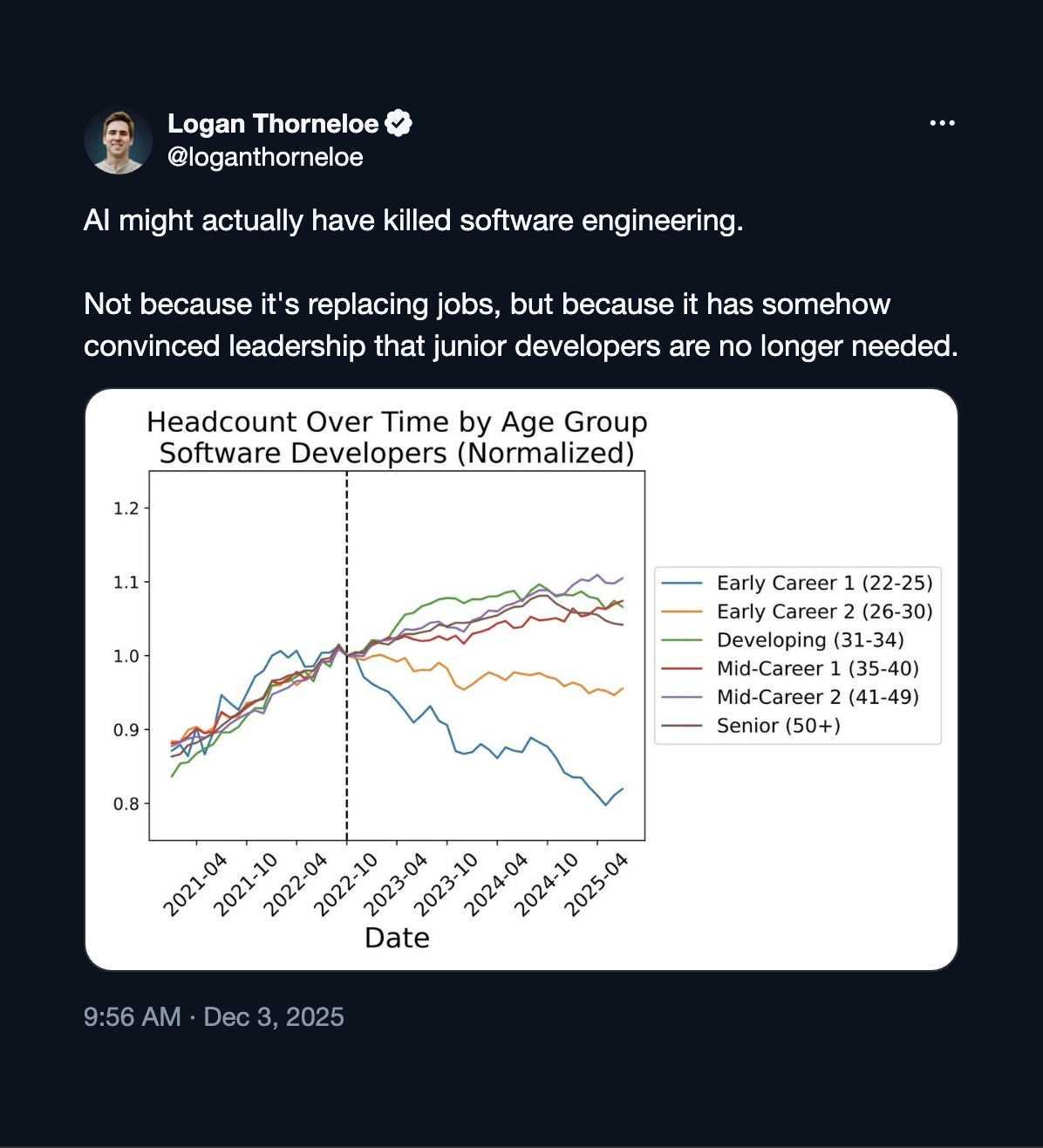

First, a recent study reported a severe decline in job listings for junior developers. It’s almost frightening: the drop is steep enough that the industry will be heavily impacted in the coming years, as we won’t have enough junior engineers to meet demand.

Everyone said AI would kill software engineering, but it turns out this has very little to do with it actually taking jobs and more to do with AI hype convincing leadership that it can.

From my research and daily work, I would have expected the number of junior developer positions to increase. AI makes junior developers much more capable when they work at a company with a good engineering culture. (I’ll cover this in a separate article next week. There’s actually an entire study showing this is the case, and it’s super interesting.)

Honestly, it’s kind of a cheat code for companies to hire junior engineers in a market like this. Companies get their pick of the most talented engineers for less, and we’ve never had so many tools to increase onboarding velocity and enable developers to build more.

What my team at Google is seeing are tons of opportunities to apply AI to developer and machine learning workflows to speed them up, but applying AI properly is much more complicated than one might think. A lot of thought needs to go into security and ensuring system performance. AI evals are much more difficult than regular test suites.

In 2026, we’ll see more applications of AI explored and productionization of agents mature. It’ll be even easier for software engineers to get involved with AI as companies realize the useful applications of AI and the headcount required to achieve it.

If you want to get into AI as a software engineer in 2026, these are the top three skills I’d focus on:

Building agents. Agent adoption will continue to grow in 2026, and companies will narrow in on their most impactful applications. Those applications will far outnumber the supply of developers able to build them.

MLOps. This has been an incredibly valuable skill for about a decade now and will only get more valuable in the coming years. More companies using AI means more models are being trained. Companies will need engineers that understand that training process and can build the infra necessary to make it happen.

AI Cybersecurity. You wouldn’t believe the security and privacy complexities non-deterministic systems introduce. This is another article I’ve got in the works and something we’ve been deeply exploring at Google. If you can understand this, there will be opportunities available.

Links to learn each are included in the ‘Learning Resources’ section at the bottom.

The last edition of AI for Software Engineers

In case you missed the last AI for SWEs, here it is. There’s more on agents, Ilya’s podcast appearance, and the importance of AI security there.

Things you should know about

Software engineering is going agentic

We already know that nearly 90% of organizations are using AI to code, but now agents are making their way into enterprises. 57% of organizations are deploying agents for multi-stage workflows, with 16% of those being cross-team workflows. In 2026, 81% of teams plan to use agents, with 39% of those agents being developed for multi-step workflows.

Interestingly, 80% of organizations are reporting a return on their investment. As we’ve mentioned previously, this is a number that is very difficult to quantify. What does ROI actually mean in multi-step agentic workflows? It greatly depends on the workflow and the goals it aims to achieve. There isn’t a universal standard for quantifying this uptick in velocity.

The use of agents and AI is extending beyond traditional software engineering tasks (code planning, generation, documentation, review, etc., where organizations are seeing a 59% increase in productivity) to tasks like data analysis and report generation, where they’re seeing similar gains.

I highly recommend reading Anthropic’s 2026 State of AI Agents Report, even if you only read the foreword. If you want me to go more in-depth into this so we can really get into how enterprises are using AI and the ROI they’re achieving, comment at the bottom of this article.

OpenAI declares a ‘Code Red’

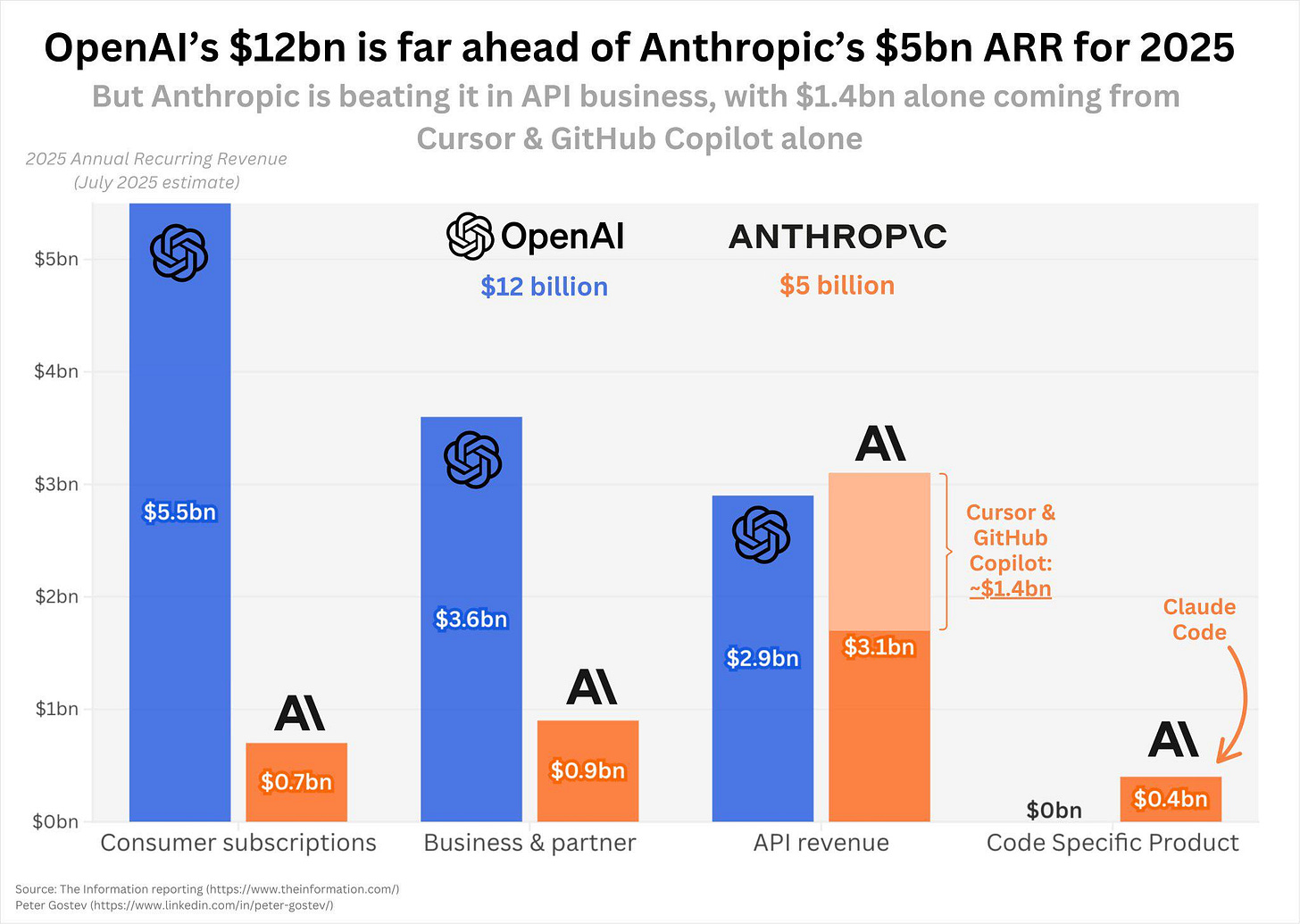

OpenAI declared a Code Red internally as competitors have started taking market share. Most notably, Google is gaining both consumer and enterprise market share in AI tools, and Anthropic has increased its revenue considerably, to the point where it’s considering an IPO in 2026.

I believe this is being largely overblown by the public. OpenAI has revolutionized the consumer understanding of LLM products and continues to lead in that area. Over the past three years, they’ve been competing with (and beating) a company of Google’s size as a startup, even though Google’s resources far exceed OpenAI’s own. What we’re seeing is OpenAI figuring out the focus of their product offerings to become revenue positive.

Anthropic is a great example of why this is important. Six months to a year ago, many individuals counted Anthropic out of the AI race because of their size and less prominent standing. In reality, they’re almost revenue positive and are consistently beating larger companies in their areas of focus, the most notable of which is coding.

We’re seeing OpenAI figure out the same right now as they continue to push their product offerings forward.

The Linux Foundation now owns Model Context Protocol (MCP)

The Linux Foundation is a non-profit organization that has been dedicated to fostering the growth of open-source software for decades. Some examples of software they steward are the Linux kernel, Kubernetes, Node.js, and PyTorch. They have a history of maintaining vendor neutrality and facilitating open collaboration.

The Agentic AI Foundation (AAIF) is a directed fund under The Linux Foundation. It’s a joint partnership between major players in AI, including OpenAI, Google, and Anthropic. The goal of the AAIF is to maintain agentic AI transparency and collaboration so the technology benefits everyone and open standards continue to be developed and maintained.

MCP will join the AAIF as a founding project to bring AI open standards under one roof. This is great news for all of us as open standards make it much easier to actually build and apply new technologies.

Developer tools continue to grow

So many developer tools are being developed and updated each week. Here are some notable developments in a rapid fire format:

You can now build applications with Gemini Deep Research Agent integration. / Google releases the FACTS Benchmark Suite, a benchmark for testing a model’s accuracy and groundedness. / llama.cpp server now includes a router mode that lets you dynamically load, unload, and switch between models without restarting by running each model in its own process. / ChatGPT is adopting skills. / Gemini CLI introduces session management. / Thinking Machines has released Tinker to everyone. / Mistral releases Mistral Vibe, their AI CLI coding tool.

My picks for the week

These are the videos and articles from this past week I think are most worth watching/reading outright. I highly recommend you don’t miss them:

TPU Mania by Babbage: Google’s recent decision to sell its TPUs externally and the speed of the TPU v5p (2.8X faster than v4) have created a major “vibe-shift” in the industry, setting up the most keenly fought architectural contest since CISC vs. RISC in the 1980s.

Researchers Built a Tiny Economy. AIs Broke It Immediately [Video]: In the SimWorld delivery economy, AI agents high in “openness to experience” became “shopaholics,” kept buying unused scooters, and went broke, while conscientious agents were the “boring winners” that achieved high profits by focusing strictly on the task at hand.

How to use Claude Code for Maximum Impact by Devansh: Enterprise adoption of Claude Code, demonstrated at companies like Doctolib, drastically cuts engineering time by allowing engineers to replace legacy testing infrastructure in hours instead of weeks, helping them ship features 40% faster.

Top 5 AI Model Optimization Techniques for Faster, Smarter Inference: Discusses optimization techniques like Quantization-Aware Training (QAT) and Pruning plus knowledge distillation, which make models cheaper, smaller, and more memory efficient to operate in production.

Olmo 3 and the Open LLM Renaissance by Cameron R. Wolfe, Ph.D.: The Olmo 3 family of models (7B and 32B) is unique in that it is “fully open,” releasing model checkpoints, all training data, and training code, making it an unprecedented and comprehensive starting point for open LLM research.
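To make the model optimization pick above a bit more concrete, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. Note that this is post-training quantization, a simpler technique than the Quantization-Aware Training (QAT) the article discusses; QAT simulates this same rounding during training so the model learns to tolerate it. The function names are hypothetical, and real frameworks quantize tensors (often per channel) with optimized kernels rather than Python lists.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: one float scale maps values onto [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero tensors
    # Round each value to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the int8 codes."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0, 0.333]
    q, scale = quantize_int8(weights)
    approx = dequantize_int8(q, scale)
    max_err = max(abs(w - a) for w, a in zip(weights, approx))
    # Each int8 code takes 1 byte versus 4 bytes for float32, at the cost of a small rounding error.
    print(q, scale, max_err)
```

The trade-off is a 4x memory reduction relative to float32, with each value off by at most half a quantization step (`scale / 2`).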

The state of the market

CEOs are still betting big on AI in 2026. As mentioned above, there’s huge demand for developers who can build agentic AI systems: taking a problem, prototyping an agentic solution as needed, and building the entire system. That requires understanding complex, multi-step workflows and the work that goes into productionizing these systems.

To learn this, I’d focus on understanding (resources for learning each at the bottom of this article):

Evals. These are like tests for LLMs and agentic systems. As any software engineer knows, testing gets much more complex when systems are non-deterministic, and that’s what makes evals so complicated.

Protocols. If you haven’t already, spin up an MCP server so your favorite CLI tool can access a resource it needs. MCP servers are huge for integration into agentic workflows, and the best way to learn them is by building one.

Agentic workflow patterns. There are certain patterns to building agents that are followed for specific use cases. I’ve linked a guide in the ‘Learning Resources’ section.
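Because agent outputs vary from run to run, an eval usually scores a pass rate over repeated trials rather than a single pass/fail. The sketch below is a minimal illustration under stated assumptions: `make_fake_agent` is a hypothetical stand-in for a real LLM call, and the graders are simple string checks. Production eval suites typically add model-based graders, larger datasets, and tracking over time.

```python
import random
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    check: Callable[[str], bool]  # grader: True if the output is acceptable


def run_eval(system: Callable[[str], str], cases: list[EvalCase], trials: int = 5) -> dict[str, float]:
    """Run every case `trials` times and return the pass rate per prompt."""
    results: dict[str, float] = {}
    for case in cases:
        passes = sum(case.check(system(case.prompt)) for _ in range(trials))
        results[case.prompt] = passes / trials
    return results


def gate(results: dict[str, float], threshold: float = 0.8) -> bool:
    """Release gate: every case must meet the minimum pass rate."""
    return all(rate >= threshold for rate in results.values())


def make_fake_agent(seed: int) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM call that answers correctly about 80% of the time."""
    rng = random.Random(seed)

    def agent(prompt: str) -> str:
        if "2 + 2" in prompt:
            return "4" if rng.random() < 0.8 else "5"
        return "I'm not sure."

    return agent


if __name__ == "__main__":
    cases = [
        EvalCase("What is 2 + 2?", lambda out: out.strip() == "4"),
        EvalCase("Name a prime number.", lambda out: out.strip() in {"2", "3", "5", "7"}),
    ]
    results = run_eval(make_fake_agent(seed=42), cases, trials=20)
    print(results, "ship" if gate(results) else "block")
```

Here the fake agent never answers the prime-number prompt, so that case scores 0.0 and the gate blocks release; the point is that thresholds apply to rates, not to individual runs.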

If you want any of these skills to be added to the AI for SWEs hands-on learning repo, comment which you’d like to see at the bottom of this article.
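For a feel of what an MCP server does, here is a simplified, stdlib-only sketch of the JSON-RPC 2.0 requests it answers for tools. It only handles `tools/list` and `tools/call`. A real server also performs the `initialize` handshake, negotiates capabilities, and runs over a transport such as stdio or HTTP, so for anything real, use one of the official MCP SDKs. The `read_note` tool, its data, and the `tool` decorator are hypothetical.

```python
import json
from typing import Any, Callable

# Hypothetical in-memory tool registry: name -> (description, input JSON Schema, handler)
TOOLS: dict[str, tuple[str, dict[str, Any], Callable[..., str]]] = {}


def tool(name: str, description: str, schema: dict[str, Any]) -> Callable:
    """Decorator that registers a function so clients can discover and call it."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = (description, schema, fn)
        return fn
    return register


@tool(
    "read_note",
    "Return the contents of a note by title.",
    {"type": "object", "properties": {"title": {"type": "string"}}, "required": ["title"]},
)
def read_note(title: str) -> str:
    notes = {"standup": "Ship the eval harness by Friday."}  # hypothetical data source
    return notes.get(title, f"No note titled {title!r}")


def _error(req_id: Any, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request string and return the response string."""
    req = json.loads(raw)
    req_id, method, params = req.get("id"), req.get("method"), req.get("params", {})
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": desc, "inputSchema": schema}
            for name, (desc, schema, _) in TOOLS.items()
        ]}
    elif method == "tools/call":
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            # JSON-RPC "invalid params" error for an unknown tool name
            return _error(req_id, -32602, f"Unknown tool: {params.get('name')}")
        text = entry[2](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        # JSON-RPC "method not found" error
        return _error(req_id, -32601, f"Method not found: {method}")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


if __name__ == "__main__":
    request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
               "params": {"name": "read_note", "arguments": {"title": "standup"}}}
    print(handle(json.dumps(request)))
```

The `inputSchema` is what lets an agent discover how to call your tool without any custom integration code, which is the main reason the protocol matters for agentic workflows.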

Interesting opportunities

If your company is hiring, you can reach 11,000+ developers by including it in this newsletter. If you’re interested, reach out to me.

Google Ads is aggressively hiring top talent

If you’re interested in working at Google Ads and you have experience with large-scale distributed systems, working in Ads, working in ML/AI, or solving complex problems at scale, please reach out! Languages of particular interest are C++, Go, and Python, but those are not a limiting factor. You can DM me here, on X, on LinkedIn, or hit up my email.

Please make sure to include information about yourself and why you’re a good fit in the DM/email. I will not respond to just ‘Hello’ (see aka.ms/nohello).

Anthropic is hiring eval talent and accepting applications for their Anthropic Fellows Program

If you aren’t on X, I’d highly recommend lurking there. If you hate the algorithm, let me know and I can help you out. Companies are aggressively seeking applicants on X and I’m guessing this is due to AI-related problems on LinkedIn.

Anthropic is looking for talent to build the next generation of evals and eval infra. They are also taking applications for their Anthropic Fellows Program which is a full-time research commitment with mentorship from Anthropic researchers. It has about a 40% chance of a full-time offer after completion if your work is excellent. Definitely check it out.

Thinking Machines is looking for many research engineers to fill ML infra positions

Thinking Machines has multiple ML infra-related research engineer positions. They’re especially cool because they’re a cross between research and engineering (meaning you’re building at the cutting edge of AI) but they also seem to be highly user-centric.

Google is hiring student researchers

Google is hiring student researchers for 2026 to work at the cutting edge of AI. If you’re into multi-agent AI systems, RAG, prompt optimization, or self-improving agents, please apply! Again, this is another job opportunity they’re sourcing through X. If you’re not on X, join and message me!

I’ll be adding more in the opportunities section as I come up with a better way to organize and keep track of all of them.

Learning resources

Reinforcement Learning: Stanford’s Deep Reinforcement Learning lectures on YouTube are world-class and available entirely for free.

Agentic Workflow Patterns: ByteByteGo newsletter recently released an article detailing these patterns at a high-level. Definitely something to be familiar with.

MLOps: I recommend checking out the MLOps community and the resources they have available. You can also find them on Substack: MLOps.

AI Cybersecurity: I don’t have a good resource for this yet. If you do let me know! Tagging Prashant Kulkarni in case he has a resource for this.

Building Agents: I’ve heard good things about DeepLearning.ai’s course on building AI agents. Check it out.

Agent Evals: For evals, also check out DeepLearning.ai. They’ve got a short course to get started on agent evals. I’ll continue looking for something more in-depth.

Agent Protocols: I recommend HuggingFace’s MCP Course to get started. Still looking for resources on other protocols.

Thanks for reading!

Always be (machine) learning,

Logan
