What are World Models? | AI for Software Engineers 75

Highlights, current events, industry trends, and career resources for 12/23/2025

Yann LeCun has confirmed his startup, Advanced Machine Intelligence (AMI), will develop world models and is currently raising funds at a $5B valuation. While headlines focus on the valuation, I care much more about the work.

Crazy valuations aren’t uncommon in the world of AI. The potential for this technology is astronomical, even if the roadmap to get there is still being discovered. I see AI’s potential as shifting humanity’s problem-solving from O(n) (or worse!) complexity to, potentially, O(1). Once we can solve problems quickly, our rate of advancement will compound and discovery will take off. If this happens, AI will be worth far more than even today’s crazy valuations suggest.

LeCun is now directly pursuing the same area of research and industry as Fei-Fei Li’s World Labs. He also joins other great minds in AI who have said AI needs a breakthrough beyond scaling today’s approaches. Many are betting that this breakthrough will be world models.

Put succinctly, world models aim to understand the 3D world and to learn more like a human child than like a machine. Instead of learning statistical correlations between inputs to generate a representative output, world models seek to understand causal physics and spatial reasoning. World Labs puts it well on its website:

“We build foundational world models that can perceive, generate, reason, and interact with the 3D world — unlocking AI’s full potential through spatial intelligence by transforming seeing into doing, perceiving into reasoning, and imagining into creating.”

In LeCun’s framing, world models aren’t generative in the pixel-predicting sense. Instead, they make predictions based on abstract representations of the concepts they’ve learned: rather than guessing at pixels, they focus on higher-level concepts.

Here’s an example to illustrate what this means: if we’re considering a car moving down a street, a generative model wastes compute estimating the pixel movement of every leaf on the road. A world model would instead ignore unimportant details and focus on the latent variables that affect its understanding, such as the car’s velocity or the friction between the car and the road. It would then use those variables to predict the world’s next state.
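To make the contrast concrete, here is a toy Python sketch of what a world model’s state might look like for the car example: two latent numbers instead of millions of pixels. The physics is hand-coded, and the `CarState` and `predict_next_state` names are hypothetical; a real world model learns both its latent variables and its transition function from data rather than having them written by hand.

```python
from dataclasses import dataclass

G = 9.81  # gravitational acceleration, m/s^2


@dataclass
class CarState:
    """Latent variables tracked instead of raw pixels (hypothetical toy state)."""
    position: float  # meters traveled along the street
    velocity: float  # meters per second


def predict_next_state(state: CarState, friction: float, dt: float) -> CarState:
    """Predict the next latent state of a coasting car slowed only by friction.

    `friction` is a hypothetical friction coefficient; deceleration = friction * G.
    """
    decel = friction * G
    # Friction slows the car down but never makes it roll backward.
    new_velocity = max(0.0, state.velocity - decel * dt)
    # Advance position using the average of start and end velocity
    # (an approximation if the car comes to a stop mid-step).
    new_position = state.position + 0.5 * (state.velocity + new_velocity) * dt
    return CarState(new_position, new_velocity)


state = CarState(position=0.0, velocity=20.0)  # 20 m/s is about 72 km/h
for _ in range(3):
    state = predict_next_state(state, friction=0.1, dt=1.0)
print(round(state.velocity, 3))  # 17.057
```

Nothing about leaves, lighting, or texture appears anywhere in the state, which is the point: prediction happens over the few variables that matter for the task.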

Practically, this means two things:

1. World models don’t waste resources on things that are unimportant for a task.
2. World models’ spatial intelligence can be used to train other real-world AI applications without collecting new data. Instead, world models can generate (or “dream”) their own training data. This is both very efficient and safer than the alternative (think of an application like self-driving, where collecting real-world data always carries inherent risk).
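The “dreaming” idea can be sketched as rolling a model forward from sampled starting conditions to produce synthetic training examples. This is a minimal illustration under loud assumptions: the hypothetical `step` function stands in for a learned world model, and the speed and friction ranges are arbitrary.

```python
import random

G = 9.81  # gravitational acceleration, m/s^2


def step(velocity: float, friction: float, dt: float) -> float:
    """Hypothetical stand-in for a learned world model: predicts the next velocity."""
    return max(0.0, velocity - friction * G * dt)


def dream_trajectories(n: int, horizon: int, dt: float = 0.5, seed: int = 0):
    """Generate synthetic (velocity, friction, next_velocity) transitions.

    No real-world driving data is collected; every transition is imagined
    by rolling the model forward from randomly sampled conditions.
    """
    rng = random.Random(seed)
    dataset = []
    for _ in range(n):
        velocity = rng.uniform(5.0, 30.0)  # sampled starting speed (m/s), arbitrary range
        friction = rng.uniform(0.05, 0.8)  # sampled road condition, arbitrary range
        for _ in range(horizon):
            next_velocity = step(velocity, friction, dt)
            dataset.append((velocity, friction, next_velocity))
            velocity = next_velocity
    return dataset


data = dream_trajectories(n=100, horizon=10)
print(len(data))  # 1000
```

Each tuple is a transition that a downstream model could train on, and rare or dangerous conditions (very low friction, high speed) can be sampled as often as needed without putting anyone at risk.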

I predict we’ll be seeing a lot more about world models in 2026, and I’m curious to see how far we’ll get. Enjoy this week’s resources!

This week’s curated highlights

My LLM coding workflow going into 2026: This is an excellent, comprehensive overview of Addy’s AI coding workflow. The best way to optimize any AI-related workflow is to discuss what works and share it with others. I highly recommend checking this out and sharing your own workflows!

Gemini Plays Pokemon: This report compares the performance of Gemini 2.5 Pro to Gemini 3 Pro playing Pokemon. It’s an interesting read. Gemini 3 Pro didn’t just play better; it exhibited creative problem-solving by finding a loophole to multitask.

Andrej Karpathy published his 2025 LLM Year in Review: I recommend just reading it, but the most interesting point is the shift from models imitating humans to models reasoning through rewards. Other notable points include image model advancements, terminal-based AI, “jagged intelligence”, new layers of LLM apps, and the introduction of vibe coding.

Jeff Dean’s Performance Hints: Jeff Dean updated his guide to engineering principles for performance at scale. The writeup focuses on optimizing software performance at the level of a single binary. Performance is a crucial topic for any engineer to understand, and it’s only becoming more important in the age of AI.

Sam Rose’s overview of LLMs/prompt caching: This article explains how prompt caching reduces token costs for LLMs and also gives a great high-level overview of LLMs, using excellent visuals. I love articles with great visuals, and Sam consistently delivers.
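Prompt caching works on prefixes: a provider can reuse work for the leading tokens of a prompt that exactly match a recently processed prompt. The sketch below is a simplified cost model under stated assumptions: words stand in for tokens, and the per-token price and 90% cached discount are hypothetical numbers, not any provider’s pricing. Real providers also add details such as minimum prefix lengths and cache expiry.

```python
def cached_prefix_length(cached: list[str], prompt: list[str]) -> int:
    """Count how many leading tokens of `prompt` exactly match a previously cached prompt."""
    matched = 0
    for cached_token, token in zip(cached, prompt):
        if cached_token != token:
            break  # the cache only helps up to the first differing token
        matched += 1
    return matched


def estimate_cost(prompt: list[str], cached: list[str],
                  price_per_token: float, cached_discount: float) -> float:
    """Bill cached prefix tokens at a discount and everything else at full price."""
    hits = cached_prefix_length(cached, prompt)
    misses = len(prompt) - hits
    return hits * price_per_token * (1 - cached_discount) + misses * price_per_token


# Words stand in for tokens; the shared system prompt comes first so it can be reused.
system = ["You", "are", "a", "helpful", "assistant", "."]
previous = system + ["What", "is", "2+2", "?"]
current = system + ["Explain", "caching", "?"]

cost = estimate_cost(current, previous, price_per_token=1.0, cached_discount=0.9)
print(round(cost, 2))  # 3.6: 6 cached tokens at 10% of full price, 3 uncached at full price
```

The design takeaway is ordering: keeping static content such as the system prompt at the start maximizes the shared prefix, while putting anything that changes per request first would break the match at the very first token.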

Your guide to local coding models: This is my article from this past week, which many of you have likely already read. I’m including it here because I made some mistakes in the initial release that I’ve since edited to clarify, and because it sparked interesting conversation across X, LinkedIn, Substack, and even Hacker News, where it reached number 1.

Things you should know

News & Trends

Google released Gemini 3 Flash, outperforming its previous Pro models

Google released Gemini 3 Flash, the new generation of its smaller model for faster, cheaper applications. It is multimodal, uses 30% fewer tokens than previous models, and is significantly cheaper ($0.50 per 1M input tokens). For applications, advances in smaller, cheaper models matter much more than advances in large models, and they’re key to democratizing the technology.
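As a quick back-of-the-envelope check on the quoted input price, here is a small Python helper. It only covers input tokens at the $0.50 per 1M rate stated above; output tokens are billed separately and aren’t modeled, and the workload in the example is hypothetical.

```python
PRICE_PER_MILLION_INPUT_USD = 0.50  # Gemini 3 Flash input price quoted above


def input_cost_usd(input_tokens: int,
                   price_per_million: float = PRICE_PER_MILLION_INPUT_USD) -> float:
    """Estimate input-token cost in USD (output tokens are not included)."""
    return input_tokens / 1_000_000 * price_per_million


# Hypothetical workload: 10,000 requests with 2,000 input tokens each.
print(input_cost_usd(10_000 * 2_000))  # 10.0
```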

AI coding assistants ship more code, but also more bugs

CodeRabbit’s recent report shows that AI-generated code contains 1.7x more issues than human-written code, including 75% more logic errors and 3x as many readability issues. AI can produce more code faster, but that code tends to be significantly worse. The higher volume of pull requests also causes reviewer fatigue, making it more likely that errors reach production.

New York governor Kathy Hochul signed the RAISE Act

Despite an executive order from President Trump to consolidate AI regulation at the federal level, New York governor Kathy Hochul signed the RAISE Act, which establishes safety standards for large AI companies in New York. The act requires companies with over $500 million in revenue to comply with specific safety standards.

AI data centers have a carbon footprint that matches a small European country

A new study shows that AI systems could produce roughly the same amount of carbon dioxide as New York City or Norway (about 80 million tons). While this is an estimate (as exact numbers are hard to come by), it shows the environmental impact AI could have and emphasizes that the real long-term problem AI needs to solve is power and energy.

AI has entered the game industry

Roblox Studio is integrating AI into its platform. This lets users generate assets from a prompt, use AI for real-time voice translation, and orchestrate work across other creative tools via MCP. The goal of this shift is to help users get to market faster and be more profitable.

In contrast, Larian Studios (the creators of Baldur’s Gate 3) CEO Swen Vincke explained that Larian only uses AI in game development for content exploration, similar to how the studio uses Google Images and art books. This came after Larian was accused of trying to use AI to replace artists; Vincke said that was false and that the studio is actually looking to hire more artists, not replace them.

The video game industry has many potential applications for AI, but gamers aren’t excited about how those applications will affect the games themselves. In 2026, I’m certain we’ll see AI used more as it becomes more cost-effective, and I’m curious to see how it gets used.

Research

Duke scientists created an AI that simplifies complex data

Researchers have created a physics-bound deep learning model that takes in complex data and outputs a much simpler, mathematical representation of that data. This is particularly useful in domains with lots of data but no equations explaining the relationships within it. The model provides a starting point for a formulaic representation of that data.
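The Duke model itself is a physics-bound deep learning system; the sketch below is not that method. It is a much simpler stand-in, a brute-force search over pairs of candidate terms fit by least squares, meant only to illustrate the general idea of turning raw data into a compact formula. The candidate terms and the “hidden” law are made up for the example.

```python
import itertools

# Candidate building blocks the search may combine (chosen for illustration).
CANDIDATES = {
    "1": lambda x: 1.0,
    "x": lambda x: x,
    "x^2": lambda x: x * x,
    "x^3": lambda x: x ** 3,
}


def fit_pair(f, g, xs, ys):
    """Least-squares fit of y ≈ a*f(x) + b*g(x) by solving the 2x2 normal equations."""
    ff = sum(f(x) * f(x) for x in xs)
    fg = sum(f(x) * g(x) for x in xs)
    gg = sum(g(x) * g(x) for x in xs)
    fy = sum(f(x) * y for x, y in zip(xs, ys))
    gy = sum(g(x) * y for x, y in zip(xs, ys))
    det = ff * gg - fg * fg
    a = (fy * gg - fg * gy) / det  # Cramer's rule
    b = (ff * gy - fg * fy) / det
    error = sum((a * f(x) + b * g(x) - y) ** 2 for x, y in zip(xs, ys))
    return a, b, error


def discover(xs, ys):
    """Return the two-term formula (name_f, a, name_g, b, error) with the lowest squared error."""
    best = None
    for (nf, f), (ng, g) in itertools.combinations(CANDIDATES.items(), 2):
        a, b, err = fit_pair(f, g, xs, ys)
        if best is None or err < best[-1]:
            best = (nf, a, ng, b, err)
    return best


xs = [i / 10 for i in range(-20, 21)]
ys = [3 * x * x - 2 * x for x in xs]  # hidden law, unknown to the search
nf, a, ng, b, err = discover(xs, ys)
print(f"y = {a:.2f}*{nf} + {b:.2f}*{ng}")  # y = -2.00*x + 3.00*x^2
```

Real equation-discovery methods have to cope with noisy data and far larger spaces of candidate expressions than this toy search.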

OpenAI research on agents and their capabilities

Just a week after we discussed OpenAI’s Code Red, OpenAI is publishing research at an astonishing rate. It has released research on chain-of-thought monitorability, AI’s capability to accelerate biological research in the wet lab, and AI’s ability to perform scientific research tasks. I’m particularly interested in the first (and you should be too if you’re building any sort of AI-powered application), and I’m loving the trend of applying AI to accelerate research.

Product & Releases

Google Labs released CC, an AI agent connected to Gmail, Drive, and Google Calendar to deliver a personalized briefing of your day.

Anthropic released Agent Skills as an open standard so other companies can integrate them into their products. Anthropic’s push to release AI standards has gone a long way toward solidifying its standing in enterprise AI.

OpenAI released GPT-5.2-Codex, a version of GPT-5.2 for coding that achieves state-of-the-art on SWE-Bench Pro and Terminal-Bench 2.

ChatGPT and Codex CLI are also adopting skills similar to Claude’s.

Google introduced FunctionGemma, a small model designed for function calling on edge devices.
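Function calling generally means the model emits a structured call (a tool name plus arguments) and the application executes it. Here is a minimal Python sketch of the application side, assuming the model returns JSON with `name` and `arguments` fields; FunctionGemma’s actual output format may differ, and the `get_weather` and `set_alarm` tools are hypothetical.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical local tool the app exposes to the model."""
    return f"Sunny in {city}"


def set_alarm(hour: int, minute: int) -> str:
    """Hypothetical local tool the app exposes to the model."""
    return f"Alarm set for {hour:02d}:{minute:02d}"


# Explicit registry: the model can only trigger functions listed here.
TOOLS = {"get_weather": get_weather, "set_alarm": set_alarm}


def dispatch(model_output: str) -> str:
    """Parse a JSON function call emitted by the model and run the matching tool."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**call["arguments"])


# Hypothetical model output; a real model's format may differ.
print(dispatch('{"name": "set_alarm", "arguments": {"hour": 7, "minute": 30}}'))  # Alarm set for 07:30
```

Checking the name against an explicit registry keeps the model from invoking anything the app didn’t deliberately expose, which matters even more when the model runs directly on a user’s device.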

Safety

OpenAI is stepping up its safety efforts by adding under-18 principles to its Model Spec for users aged 13 to 17. OpenAI has also created a guide to responsible AI use for families and parents.

Career resources

State of the market

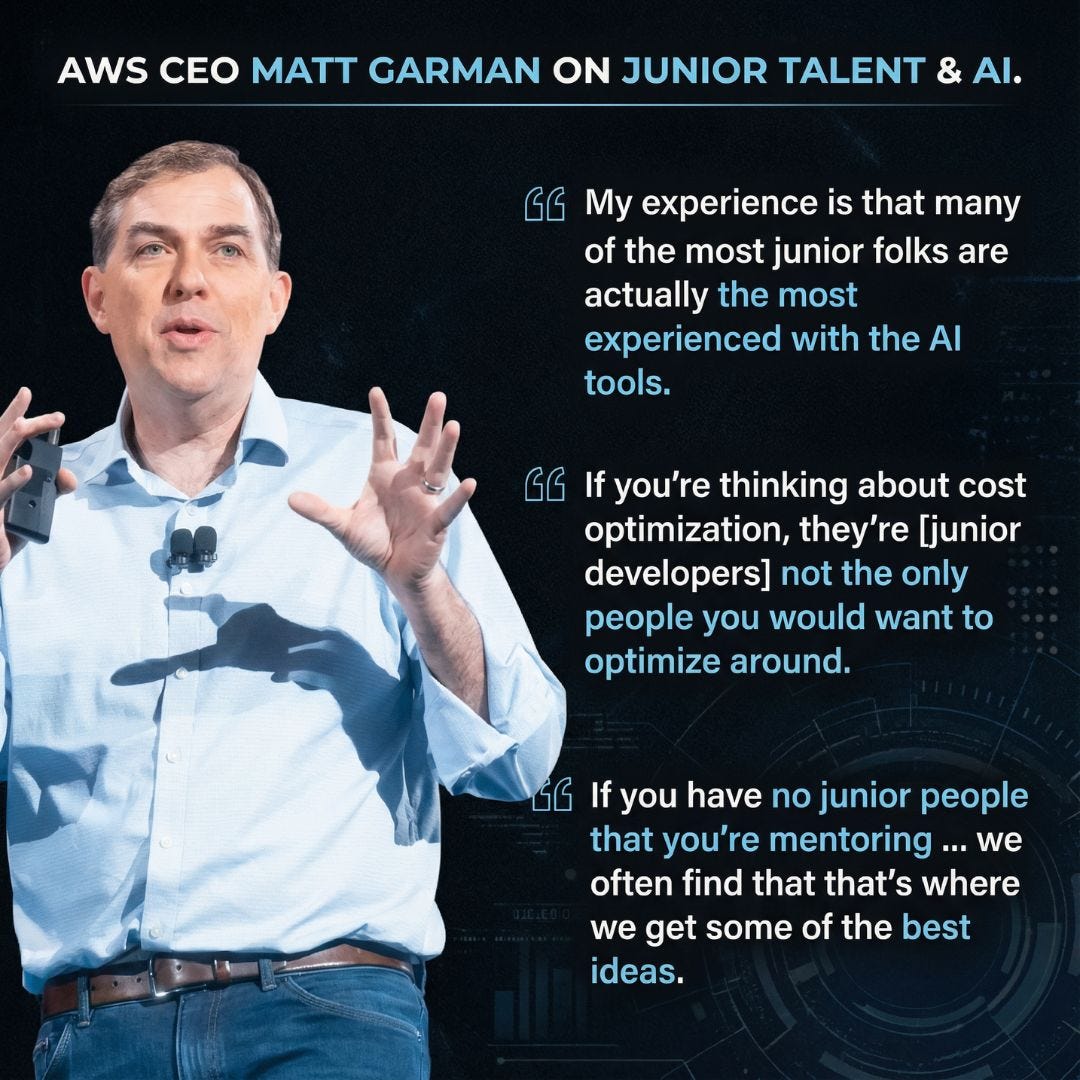

AWS CEO says replacing junior engineers with AI is foolish

Amazon has a ruthless history of optimizing for cost, even to the detriment of its employees. When the CEO of AWS says replacing junior engineers is a bad idea, you know it’s true. The software engineering industry will be in a bad place when we need more senior engineers but have abandoned the junior engineers who should become them.

Not everyone is convinced by AI coding

This is an interesting article I helped contribute to that highlights the gap between expectations and reality for AI in software engineering. Expectations are set very high: engineers should be able to greatly increase their productivity by using AI coding tools. In reality, it takes time for developers to learn these tools and how to use them productively. In fact, the initial onboarding can even cause a hit to development velocity that isn’t reflected in performance expectations.

Interesting opportunities

Google Ads is still hiring! There’s an aggressive push to hire top talent in Google Ads. We’re specifically looking for mid- to senior-level developers with experience in Python, C++, Go, or Java who have worked on large-scale distributed systems. GenAI, ML, and ads experience is a plus. If you’re interested, check out the open roles here and/or DM me with any questions.

Learning Resources

Packt is offering any technical ebook for $10, dropping to as low as $5.99 per book if you buy 10 or more. This is the best deal I’ve seen yet for building a technical book library.

This comprehensive open-source roadmap walks you from LLM fundamentals to deploying advanced LLMs. It organizes the learning path into three tracks: LLM Fundamentals, The LLM Scientist, and The LLM Engineer.

Check out an Agentic AI problem set developed by Prof. Tom Yeh. Professor Yeh writes AI by Hand, an excellent resource for understanding the inner workings of machine learning models. This problem set is a great way to test your knowledge of AI agents and upskill while you’re at it.

If you missed last week’s article, you can check it out here:

Thanks for reading!

Always be (machine) learning,

Logan
