AI's Role in Maduro's Capture | AI for Software Engineers 76

Plus: Half of AI code has security flaws, how to fix AI in education, Nvidia acquihires Groq, AI safety concerns grow, and more

Here are my picks for content you don’t want to miss and everything you should know about AI for January 7, 2026. Enjoy!

My Picks

21 lessons from 21 years at Google by Addy Osmani: Lessons learned from working at Google for 21 years. Two notable lessons: most slow teams are actually misaligned, and the best engineers are obsessed with solving user problems. All are worth reading.

Reasoning models are a dead end by Devansh: A valuable take on reasoning models and their lack of interpretability. Reasoning encoded into model weights loses 95% of intermediate branching and produces brittle behavior compared to externalized reasoning infrastructure. A great example of why engineering is so important in AI.

The suck is why we’re here: Some great perspective on writing with AI. The author argues that AI shortcuts the crucial, difficult parts of writing (research, stuck thinking), and that avoiding these “sucky” parts sacrifices depth and lasting reward. AI will increase quantity but lower average quality, making genuine effort stand out.

Advent of Code 2025 with Compute Shaders by Matthew Carlson: An excellent exploration of implementing Advent of Code solutions using GPU compute shaders on Metal. The GPU kept consistent times (~5ms) as problem size grew while CPUs slowed dramatically, demonstrating practical applications for massively parallel problem solving.

Building AI Agents, Open Code And Open Source by Madison Kanna: I thoroughly enjoyed reading this interview, especially the parts about open versus closed source tools and the motivation behind them. Terminal agents are only going to be more important this year and this does a great job of helping readers understand them.

Things you should know

AI was used to push narratives in Nicolás Maduro’s capture

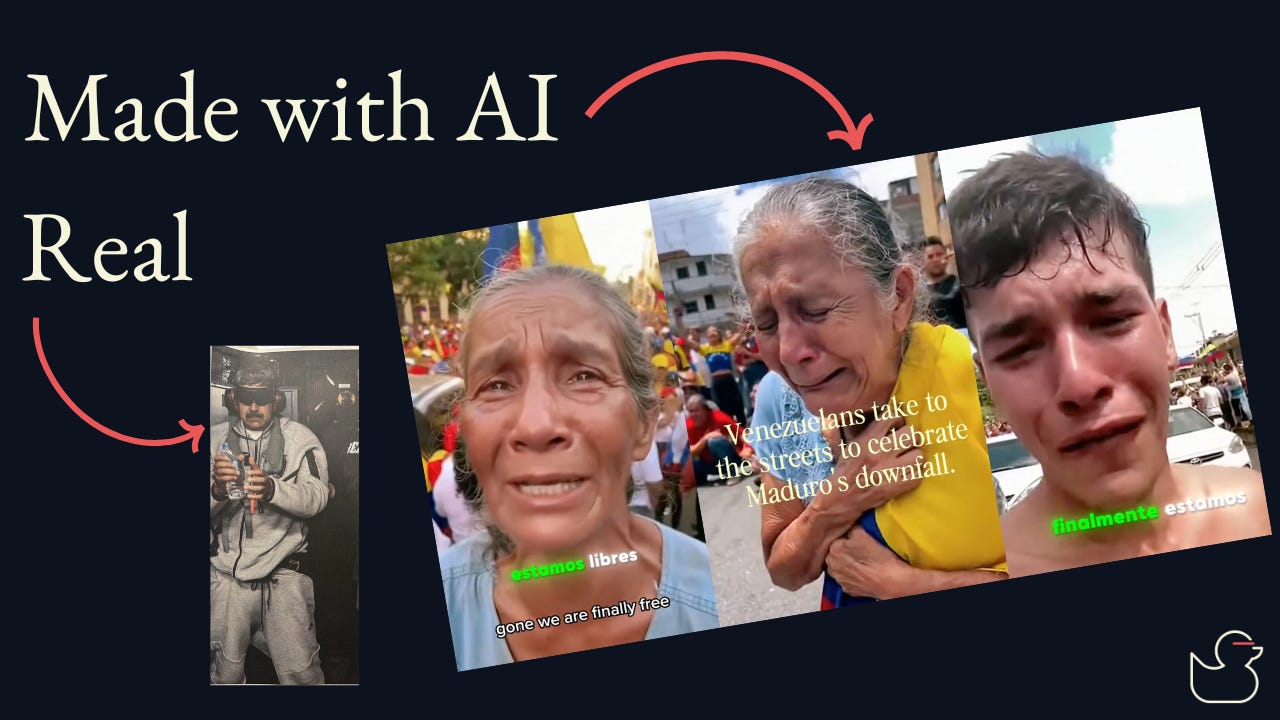

AI-generated media circulated the internet following the US capture of Venezuela’s president Nicolás Maduro. Fake images depicted the capture itself, while a deepfake video showed Venezuelans crying tears of joy. Both were used to push specific narratives about the operation.

Any company serious about AI needs to help viewers discern between AI and non-AI media. The images above were caught by Google’s SynthID watermark, which Google attaches to all images generated with Gemini. Sure, anyone can switch to a non-watermarking tool, but even putting up a small obstacle to generating a fake narrative is a big win.

Source: EBU Spotlight on Maduro fake images, Yahoo News on fake celebration video, Google SynthID


AI safety concerns mount as AI chatbots face serious scrutiny

xAI was fined 120 million euros under the Digital Services Act due to Grok generating sexually explicit images of women and children. Separately, a lawsuit alleges OpenAI is withholding ChatGPT logs after a murder-suicide where transcripts show the chatbot validated a user’s paranoid delusions.

AI safety is foundational to ensuring we can apply AI to the applications where it’s needed. It’s crazy to me that AI safety teams were previously understaffed or dismissed. Both of the examples above show why AI safety is important and also some of the difficulties that come with ensuring safety.

Source: TVP World on Grok, Ars Technica on ChatGPT logs

Half of AI-generated code has security flaws

Over 30% of senior developers now ship mostly AI-generated code, and the trade-offs are becoming clear. AI code shows logic errors at 1.75x the human rate, XSS vulnerabilities at 2.74x, and roughly 45% of it has security flaws. PR sizes are up 18%, incidents per PR are up 24%, and change-failure rates have risen 30%. Properly configured AI review tooling catches 70-95% of low-hanging bugs.

These statistics echo my recent article detailing how AI impacts an organization’s engineering culture. AI is an amplifier, and if your processes aren’t solid, AI will make them worse.

Source: Addy Osmani, AI for Software Engineers

The best way to fight AI cheating in education is with AI

An NYU professor is using AI to conduct oral exams with students at just 42 cents per student. The AI asks follow-up questions and probes understanding in real-time, forcing students to verbally explain concepts rather than paste in AI-generated answers. This follows a trend where some schools have removed online math courses entirely or now require in-person testing as instructors note declining problem-solving skills and increased reliance on copying AI outputs.

One of my biggest concerns with AI is education. It has the potential to be the greatest multiplier but also the worst detriment in this space. As with many other applications, we’re seeing AI-related problems being combatted with AI-related solutions.

Source: Reddit discussion on AI oral exams

Claude Code creator shares his setup for using Claude Code

Boris Cherny, who created Claude Code, runs multiple instances at a time with a focus on Opus 4.5 with “thinking.” It needs less steering despite being slower per token, which increases velocity in the long run. He also claimed that Claude Code’s updates are all written entirely by Claude Code itself.

Separately, a principal engineer at Google mentioned just how far Claude Code has come by saying it can now design specs that took multiple engineers a few months ago. An ex-Google PM commented on this, explaining how important it is for engineering teams to use competitors’ products to improve their own.

My only addition: stop thinking of Claude Code, Gemini CLI, and Codex as coding agents. Instead, think of them as terminal agents. Anything you can do from the terminal, it’s possible to get AI to do for you.

Source: Boris Cherny on X, Jaana Dogan on X, Raiza Martin on X

Research to watch in 2026: Recursive Language Models and Manifold-Constrained Hyper-Connections

Recursive Language Models (RLMs) let models handle context windows up to 100x longer than their native limits by breaking inputs into chunks and processing them programmatically. In tests scaling from 8K to 1M tokens, base models degraded sharply while RLMs maintained performance at comparable cost.
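To make the chunk-and-recurse idea concrete, here is a minimal sketch. It is not the paper's implementation: `recursive_answer`, `call_model`, `max_chars`, and the `fake_model` stand-in are all hypothetical names, and the character budget is an assumed proxy for a token limit. The function answers directly when the input fits the budget, otherwise splits it, answers each chunk, and recurses on the combined partial answers.

```python
from typing import Callable


def recursive_answer(
    text: str,
    question: str,
    call_model: Callable[[str], str],
    max_chars: int = 2000,
) -> str:
    """Answer `question` over `text`, recursing when `text` exceeds the budget."""
    if len(text) <= max_chars:
        # Base case: the input fits in the assumed context budget.
        return call_model(f"Question: {question}\n\nContext:\n{text}")

    # Split into fixed-size chunks and answer each one independently.
    chunks = [text[i:i + max_chars] for i in range(0, len(text), max_chars)]
    partials = [
        recursive_answer(chunk, question, call_model, max_chars)
        for chunk in chunks
    ]

    combined = "\n".join(partials)
    if len(combined) >= len(text):
        # Guard: if the partial answers did not shrink the input,
        # truncate so the next call is guaranteed to hit the base case.
        combined = combined[:max_chars]

    # Recurse on the (shorter) combined partial answers.
    return recursive_answer(combined, question, call_model, max_chars)


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call: returns context lines containing 'needle'."""
    context = prompt.split("Context:\n", 1)[1]
    hits = [line for line in context.splitlines() if "needle" in line]
    return "\n".join(hits) if hits else "none"
```

In practice `call_model` would wrap a real model API, and a fixed-size split can cut a relevant passage in half, so overlapping or structure-aware chunks are a likely refinement.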

Separately, a technique called Manifold-Constrained Hyper-Connections (mHC) stabilizes model training with only 6.7% overhead, eliminating common instability issues that plague large model runs.

Both papers tackle fundamental scaling bottlenecks: RLMs at inference time and mHC at training time. If these techniques hold up, they could meaningfully change how we build and deploy large models.

Source: Alex Zhang on RLMs, mHC paper on arXiv

NVIDIA acquihires Groq through licensing deal

Groq signed a licensing deal with NVIDIA that will see about 90% of Groq’s 400+ employees move to NVIDIA at a $20B valuation. Groq will remain independent and GroqCloud will continue operating. Groq’s specialty is developing compute for incredibly low-latency inference, something NVIDIA can benefit from as it continues to ramp up its research and development of AI compute.

This is another acquihire within the AI industry. The most recent I can think of was Google acquiring talent from Windsurf, which led to Google’s Antigravity IDE. I see something similar happening at NVIDIA, where they’ll come out with even lower-latency compute offerings for customers.

Source: The Chip Letter by Babbage

More...

A shape-shifting molecule discovery could change the future of AI hardware. (Science Daily on shape-shifting molecules)

Micron shares surged over 10% on AI optimism and increased demand for high-performance memory. (Micron stock coverage)

A California state senator introduced a four-year moratorium on AI chatbot-equipped toys for minors. (Coverage of AI toy moratorium)

Claude Code can run on the go from an iPhone, using Termius and mosh to connect to a VM costing about $7/day. (Granda.org)

Advanced AI could collapse labor’s share of GDP toward zero, concentrating wealth among capital holders. (Dwarkesh Patel on X)

An excellent overview of the past 10 years of AI. (Weighty Thoughts by James Wang)

An interesting read from an author who canceled their technical book publishing deal for various reasons. (Austin Henley)

PostgreSQL dominated 2025, driving major acquisitions and new DBaaS launches across all major cloud vendors. (Databases in 2025: A Year in Review)

Two excellent 2025 retrospectives worth reading. (Ignorance.ai on 10 AI stories by Charlie Guo, Simon Willison on the year in LLMs)

Last week

In case you missed it, here’s last week’s overview:

AI for Software Engineers: Looking Forward to 2026

Happy New Year! Thank you all for your support in 2025! 2026 will be an even better year for AI for Software Engineers! Here’s a recap of the year, what to look forward to in 2026, and a few questions to help me improve the newsletter. 😊

I’ve removed the jobs and industry updates from these weekly roundups. I haven’t been able to fit them properly at this cadence and will be moving them to their own, less frequent articles. Stay tuned!

Thanks for reading!

Always be (machine) learning,

Logan