AI’s Economic Impact Is Real | AI for Software Engineers 78

This week: AI means more software engineers, young adults trust AI with financial decisions, tips on agent safety, and more

I’ve seen a lot of articles recently claiming AI has had near zero economic impact despite making up a large portion of economic spending. In reality, AI is starting to show economic impact. It just takes time to see because productivity gains are a second-order metric that won’t show immediately.

However, AI’s economic impact will compound over time because:

“Productivity helps define how fast the economy can grow without inflation. This is because taking away population growth and exports, what your economy can sustain is defined by how efficiently you can build stuff.” — James Wang in Weighty Thoughts

Anthropic just released their Economic Index to understand Claude’s impact on work productivity beyond simple tasks. They analyzed over two million conversations (web app and API), categorizing each by task complexity, skill requirements, purpose, autonomy level, and success rate.

A few caveats before the findings.

First, Anthropic has Claude answer a standard set of questions to sort each conversation into the categories above. This isn’t foolproof. LLM output is non-deterministic and imperfect, so some classifications will be wrong due to hallucination, bias, or other factors.

Second, this doesn’t invalidate Anthropic’s findings. With two million conversations, individual classification errors largely wash out as noise, as long as they aren’t systematically skewed in one direction, so the aggregate patterns remain meaningful even if some classifications are off. And as an LLM provider, Anthropic has access to data that third-party reports wouldn’t have.

Third, Anthropic only has access to Claude data. This is Claude-centric rather than industry-wide, though I’d bet findings across major LLM providers would be similar.
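On the second caveat, it helps to be precise about what a large sample buys. More conversations shrink sampling noise, but a classifier with a consistent error rate shifts aggregate shares in a predictable direction instead of cancelling out. The sketch below is a toy model, not Anthropic’s method. It assumes a binary label (say, augmentation vs. automation) and a symmetric, known error rate, and the function names are hypothetical. It shows how the observed share relates to the true share and how that relationship can be inverted.

```python
import random

def observed_share(true_share: float, error_rate: float) -> float:
    """Expected share labeled positive when each label flips with probability error_rate."""
    return true_share * (1 - error_rate) + (1 - true_share) * error_rate

def corrected_share(observed: float, error_rate: float) -> float:
    """Invert observed_share. Only valid for a known, symmetric error_rate below 0.5."""
    if not 0 <= error_rate < 0.5:
        raise ValueError("error_rate must be in [0, 0.5)")
    return (observed - error_rate) / (1 - 2 * error_rate)

def simulate(n: int, true_share: float, error_rate: float, seed: int = 0) -> float:
    """Label n synthetic conversations with a noisy classifier; return the observed share."""
    rng = random.Random(seed)
    positives = 0
    for _ in range(n):
        truth = rng.random() < true_share
        flipped = rng.random() < error_rate
        positives += truth != flipped  # XOR: a flip inverts the true label
    return positives / n
```

With a large `n`, `simulate` converges on `observed_share(...)`, not on the true share. That gap is what `corrected_share` removes when the error rate is known. In practice the error rate would have to be estimated, for example by hand-labeling a sample of conversations.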

The main takeaways:

Complex work benefits more than simple work. Tasks requiring college-level skills see 12x speedups. High school-level tasks see 9x. A common argument against AI is that it can only handle simple tasks. This data suggests otherwise.

People are working with AI, not being replaced by it. Augmentation (52% of usage) now leads automation (45%), reversing the trend from earlier in 2025.

AI adoption is accelerating fast. Task coverage across occupations grew from 36% in January to 49% by November, a 13-percentage-point jump (about 36% relative growth) in 10 months.

Reliability depends on task complexity. Success rates fall as tasks get longer: API tasks drop to a 50% success rate at around 3.5 hours of work, while Claude.ai tasks don’t hit that threshold until around 19 hours.

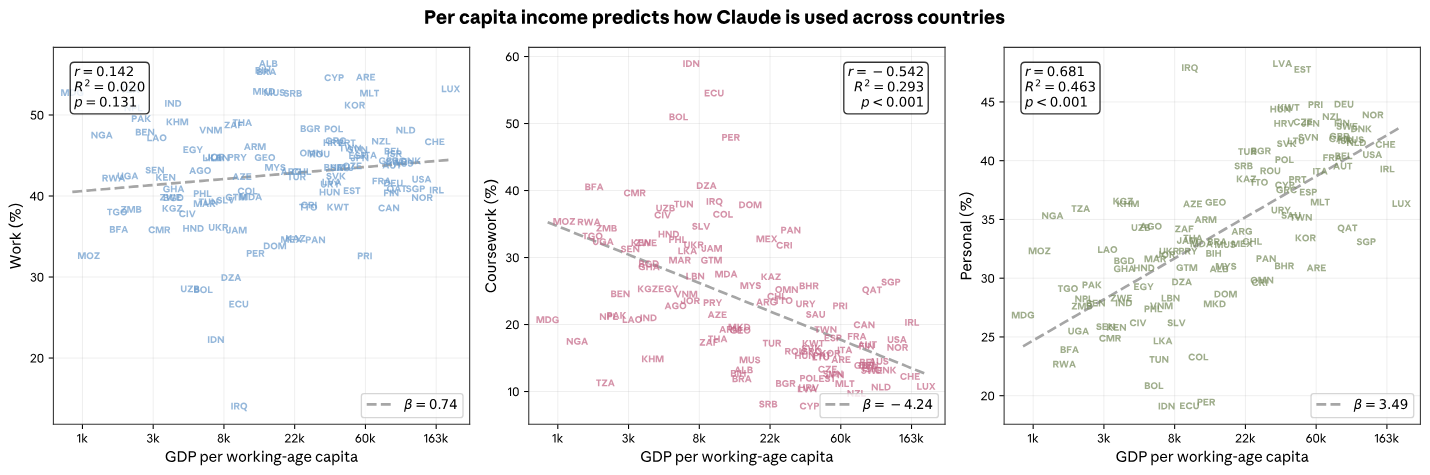

Usage patterns reveal economic divides. Higher GDP countries use Claude for work and personal tasks. Lower GDP countries use it primarily for education.

The final bullet is particularly interesting (see chart):

“In countries with higher GDP per capita, Claude is used much more frequently for work or for personal use—whereas countries at the other end of the spectrum are more likely to use it for educational coursework.”

At first glance, this suggests AI is widening the production gap between high- and low-GDP countries since high-GDP countries use Claude to get work done more effectively.

After further thought, AI may be providing low-GDP countries with educational resources they wouldn’t otherwise have. This could actually lessen the production gap over time by enabling economic growth via a more educated populace.

Let me know what you think in the comments. I’m especially curious if you disagree. Enjoy the rest of this week’s edition! Later this week, I’ll be updating my ML roadmap with more AI engineering resources, so make sure to check it out!

My Picks

How to write a good spec for AI agents by Addy Osmani

A practical framework for writing specs that actually work with AI coding tools. Plan first in read-only mode, let the agent expand the brief into a structured SPEC.md, then break work into small testable tasks. It covers the six core areas every spec needs (commands, testing, structure, style, git workflow, boundaries) and how to use architect/overview agents to maintain consistency.
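To make the six core areas concrete, here is a small checker that reports which areas a SPEC.md draft is missing. It assumes each area shows up as a markdown heading containing the area’s name. That heading convention, the `missing_areas` function, and the sample spec are my own illustration, not part of Osmani’s framework.

```python
import re

# The six core areas from the post; matching is a case-insensitive substring check.
CORE_AREAS = ["commands", "testing", "structure", "style", "git workflow", "boundaries"]

def missing_areas(spec_text: str) -> list[str]:
    """Return core areas that no markdown heading (e.g. '## Testing') mentions."""
    headings = [
        m.group(1).lower()
        for m in re.finditer(r"^#{1,6}[ \t]+(.+)$", spec_text, flags=re.MULTILINE)
    ]
    return [area for area in CORE_AREAS if not any(area in h for h in headings)]

if __name__ == "__main__":
    draft = """# SPEC: export service
## Commands
`make test`, `make lint`
## Testing
Every task ships with a unit test.
## Project structure
src/, tests/
## Boundaries
Never touch migrations/ without asking.
"""
    print(missing_areas(draft))  # prints ['style', 'git workflow']
```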

Slop is everywhere for those with eyes to see

“The algorithm has flattened curiosity by eliminating the need to hunt for our content.” — Joan Westenberg

The biggest takeaway: the shift from curation to algorithmic delivery flattens curiosity and pressures teams to optimize for metrics at the cost of quality. As we rely on feeds to deliver content, feed providers will rely on AI to make content easier to create, or simply to make up for having too few human creators relative to consumers on a platform. This is why “AI slop” is so prominent online. Feeds have cost us our sense of curiosity and the effort we used to put into cultivating it.

The AI Manager’s Schedule by Charlie Guo

AI coding tools now handle more task types with longer coherence, shifting the question from “can AI do this?” to “should I?” Management now happens in 5-15 minute intervals that require new skills: crisp written architectures, slicing work into AI-sized chunks, and knowing when to override. It also explores the cognitive costs of agent orchestration and the risks of losing low-level understanding.

GPU Performance Engineering Resources

I’d guess ~50% of the AI engineering job listings I read ask for some form of compute optimization. If you want to work as an engineer in AI, this is a great topic to learn. This resource is a curriculum for learning GPU performance engineering, and I’ll be adding it to the roadmap very soon.

Claude Cowork’s file exfiltration flaw exposes agent security challenges

Security researchers at PromptArmor discovered an unresolved isolation flaw in Claude Cowork that allows indirect prompt injections to exfiltrate files. When a user opens a maliciously crafted document, injected instructions can cause Claude to upload local files to an attacker-controlled Anthropic account using the platform’s allowlisted API, with no human approval required. The attack works across multiple Claude models (Haiku, Opus 4.5), and file type mismatches can also be exploited for denial-of-service (DoS).

This is yet another example of why agent security is so difficult (see our coverage of Antigravity’s vulnerabilities). As an engineer, you have to assume that anything in an LLM’s context can end up as input to any tool the LLM has access to. I’ve got an article coming out about this soon.

Source: PromptArmor on Claude Cowork exfiltration
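One defense that follows from this is to gate tools by what they can do, not only by where they send data. In the Cowork case the exfiltration went through an allowlisted API, so a domain allowlist alone wouldn’t have stopped it. Below is a minimal sketch assuming a hypothetical agent loop in which every model-requested tool call passes through a `dispatch` function. The tool names and the `approve` callback are illustrative and not part of any Claude or Cowork API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

# Hypothetical tool names: anything that can send data off the machine counts as egress.
EGRESS_TOOLS = {"http_request", "upload_file", "send_email"}

@dataclass
class ToolCall:
    name: str
    args: dict[str, Any] = field(default_factory=dict)

class ApprovalRequired(Exception):
    """Raised when a human declines an egress tool call."""

def dispatch(
    call: ToolCall,
    tools: dict[str, Callable[..., Any]],
    approve: Callable[[ToolCall], bool],
) -> Any:
    """Run a model-requested tool call, pausing for human approval on egress tools."""
    if call.name not in tools:
        raise KeyError(f"unknown tool: {call.name}")
    # Gate on capability, not destination: an allowlisted endpoint can still receive stolen data.
    if call.name in EGRESS_TOOLS and not approve(call):
        raise ApprovalRequired(f"human rejected {call.name} with args {call.args}")
    return tools[call.name](**call.args)
```

In a real agent, `approve` would show the user the full arguments (which file, which destination) before anything leaves the machine. This doesn’t prevent prompt injection. It only means an injected instruction can’t move data without a human seeing it.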

LangChain CEO on building agent memory and observability

Harrison Chase (the CEO of LangChain) shared multiple blog posts about AI agents in software engineering, all worth paying attention to if you’re planning to build agents yourself.

First, he wrote about traces as documentation for understanding what agents are doing. This was included in last week’s edition, but it’s worth mentioning again. An agent’s logic isn’t captured in its code but in the LLM’s traces, so traces have to serve as the equivalent of test cases for verifying that the agent behaves correctly. Working with traces is much harder than writing test cases, so I suggest reading his entire post to get the full picture.
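Here’s a rough sketch of what using a trace as a test case could look like. It assumes a hypothetical trace format (a list of step dicts with a `type` and, for tool calls, a `tool` name), which is not LangSmith’s actual schema. Since LLM text varies from run to run, the check asserts properties of the agent’s behavior (which tools it called, in what order, and which it never called) rather than exact outputs.

```python
from typing import Any

# Hypothetical trace format: [{"type": "tool_call", "tool": "search"}, {"type": "llm"}, ...]
Trace = list[dict[str, Any]]

def tool_sequence(trace: Trace) -> list[str]:
    """Ordered names of the tools the agent called."""
    return [step["tool"] for step in trace if step.get("type") == "tool_call"]

def check_trace(trace: Trace, required_order: list[str], forbidden: set[str]) -> list[str]:
    """Return behavioral violations; an empty list means the trace passes."""
    calls = tool_sequence(trace)
    problems = [f"forbidden tool called: {tool}" for tool in calls if tool in forbidden]
    # required_order must appear as a subsequence; other calls may be interleaved.
    remaining = iter(calls)
    if not all(any(call == req for call in remaining) for req in required_order):
        problems.append(f"expected {required_order} in order, got {calls}")
    return problems
```

A trace recorded from a known-good run can be turned into `required_order` and `forbidden`, then rerun after every prompt or model change, much like a regression test.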

Second, he shared how LangChain set up the memory system for its Agent Builder. Context/memory is another fundamental driver of agent performance. Understanding how to maintain agent information so an agent can (and can’t!) do certain things is key to ensuring it functions properly. A great example of forgetting is the Ralph Wiggum protocol we discussed last week.

Lastly, Harrison shared an article about the release of LangChain’s Insights Agent. This is an agent that checks traces for you to understand how users use your agents. It uses a clustering algorithm to group similar traces and, therefore, similar actions. I’ve been saying for a while that some sort of anomaly detection system to determine deviant agent behavior would be great for observability, but it’s possible this clustering approach is the real answer we’re looking for.

Source: LangSmith Agent Builder memory system, LangSmith Insights Agent, Harrison Chase on traces as documentation
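I don’t know the details of the Insights Agent’s clustering, so the sketch below is a deliberately crude stand-in rather than LangChain’s algorithm. It groups traces by their tool-call signature (collapsing immediate retries of the same tool) and flags groups that make up a small share of all traces as candidate anomalies. The input format (a mapping from hypothetical trace IDs to ordered tool names) and the 5% default threshold are assumptions for illustration. A production system could cluster on richer features, such as embeddings of inputs and outputs.

```python
Signature = tuple[str, ...]

def signature(tool_calls: list[str]) -> Signature:
    """Collapse consecutive repeats so retries of the same tool don't create new groups."""
    sig: list[str] = []
    for tool in tool_calls:
        if not sig or sig[-1] != tool:
            sig.append(tool)
    return tuple(sig)

def group_traces(traces: dict[str, list[str]]) -> dict[Signature, list[str]]:
    """Map each behavior signature to the IDs of the traces that share it."""
    groups: dict[Signature, list[str]] = {}
    for trace_id, calls in traces.items():
        groups.setdefault(signature(calls), []).append(trace_id)
    return groups

def rare_groups(traces: dict[str, list[str]], max_share: float = 0.05) -> dict[Signature, list[str]]:
    """Return groups whose share of all traces is at most max_share (candidate anomalies)."""
    groups = group_traces(traces)
    total = len(traces)
    return {sig: ids for sig, ids in groups.items() if len(ids) / total <= max_share}
```

Exact-signature grouping is brittle (two nearly identical behaviors land in different groups), which is exactly the gap a real clustering approach is meant to close.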

xAI employee ousted after leaking “human emulator” roadmap

A former xAI employee publicly disclosed an internal roadmap revealing development of a “human emulator” aimed at automating a wide range of human tasks. They revealed this on a podcast (apparently) without company consent and were removed from their position immediately.

Two things to take away from this:

Don’t go on a podcast and share internal secrets. Definitely don’t go on a podcast and reveal internal secrets while saying something along the lines of “I shouldn’t be sharing this”.

Human emulation shouldn’t be a surprise to anyone. Physical intelligence companies are largely building toward a humanoid form factor because humans are the interface for all the work we do. If an AI can emulate a human, it can do what that human can do. It’s similar to self-driving cars: there are definitely better setups for automated transportation, but cars are the standard, so the car form factor is what’s being automated.

Source: xAI human emulator leak

AI means more software engineers, not fewer

We’ve been trying to replace software engineers for decades. COBOL tried to let business workers write their own code. Visual Basic made Windows apps easier. No-code tools promised the same thing. AI is the latest chapter because it’s exceptionally good at translating plain English into reliable code.

The problem is that software engineering sounds simple when described in plain language but is inherently complex. Effective software requires domain understanding and capable judgment, not just code generation (see our article about software engineering being about problem solving, not writing code).

In fact, the entire history of software engineering has been about creating different levels of abstraction to simplify complex pieces of the job. AI is one of these abstractions (and a very effective one at that!).

Every time we create new abstractions and software becomes easier to build, we end up building exponentially more of it. Addy Osmani calls this the Efficiency Paradox. We don’t run out of ideas or software that needs to be built. Instead, we’re economically enabled to produce greater output.

With regard to AI’s abstraction, Osmani wrote:

“The real question is whether we’re prepared for a world where the bottleneck shifts from ‘can we build this?’ to ‘should we build this?’”

Not only does AI as a technology let us build more capable software, but AI as a development tool also lets us build it at an unprecedented rate. Once we begin building exponentially more software, we’ll need more software engineers to build and maintain that code.

Source: The recurring dream of replacing developers, The Efficiency Paradox, Grady Booch on abstraction

Product-minded engineering means getting error design right

Gergely Orosz published a deep dive on why good error and warning design is high-leverage work. Diagnostics are often the primary interface users encounter, so input should be validated upfront and errors raised at the API/UI boundary, where they surface early.

Engineers should categorize errors for human vs. programmer consumers, choose clear error classes and metadata, and provide contextual, actionable messages including suggestions. Error messages are often the most-seen part of your product’s interface, yet engineers treat them as an afterthought. The best product-minded engineers recognize that a confusing error is costly (support tickets, user frustration, lost trust, etc.). Investing in clear, actionable error design pays compounding dividends.

We’ve recently discussed the importance of being a product-minded engineer to succeed in the AI era. Error handling is an important way to do that.
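Here’s a minimal Python sketch of several of those ideas: a dedicated error class, a machine-readable code for programmatic consumers, a human-readable message with an actionable suggestion, and validation at the boundary before any work happens. `ValidationError`, its fields, `create_user`, and the age limits are hypothetical illustrations, not taken from Orosz’s article.

```python
from dataclasses import dataclass, field

@dataclass(eq=False)
class ValidationError(Exception):
    """Two audiences: `code`/`field_name` for programs, `message`/`suggestion` for humans."""
    code: str
    field_name: str
    message: str
    suggestion: str = ""
    context: dict = field(default_factory=dict)

    def __str__(self) -> str:
        text = f"[{self.code}] {self.field_name}: {self.message}"
        return f"{text} Try: {self.suggestion}" if self.suggestion else text

def create_user(email: str, age: int) -> dict:
    """Validate at the API boundary so bad input fails fast with a specific, actionable error."""
    if "@" not in email:
        raise ValidationError(
            code="invalid_email",
            field_name="email",
            message=f"'{email}' is not a valid email address.",
            suggestion="Use the form name@example.com.",
            context={"value": email},
        )
    if not 13 <= age <= 130:  # hypothetical business rule
        raise ValidationError(
            code="age_out_of_range",
            field_name="age",
            message=f"Age must be between 13 and 130, got {age}.",
            suggestion="Check that the birth year was entered correctly.",
        )
    return {"email": email, "age": age}
```

Calling code can branch on `err.code`, while the user sees `str(err)`, e.g. `[invalid_email] email: 'bob' is not a valid email address. Try: Use the form name@example.com.`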

As an aside: The Pragmatic Engineer is also hiring a part-time, remote Tech Industry Analyst to research engineering trends and produce in-depth subscriber reports. The pay is very high (~$175/hr), so it’s probably worth taking a look.

Source: The Product-Minded Engineer on errors and warnings, Tech Industry Analyst role

Young adults are trusting AI with financial decisions

Cleo AI surveyed 5,000 UK adults aged 28-40 and found strong interest in AI-driven money management: 64% would trust AI with disposable income decisions, 54% to move money to avoid overdrafts, and 52% to manage bills. This comes alongside weak financial confidence, with 37% reporting poor self-discipline and 80% wanting to improve their financial knowledge.

Last week, we discussed how people are increasingly turning to AI for healthcare advice. Now we’re seeing the same pattern with personal finance. These are high-stakes domains where bad advice can cause real harm, yet users are willing to delegate decisions to AI anyway. The common thread is accessibility: AI is available 24/7, doesn’t judge, and provides immediate answers. Trust remains a gating factor though (as we’ve discussed previously), with 23% saying they want incremental proof before wider use.

Source: Cleo AI survey on financial trust

Quickies

Google.org is providing $2M to Sundance Institute to train 100,000+ artists in AI filmmaking skills with free curricula and scholarships. src

SAP and Fresenius are building a sovereign AI platform for healthcare, investing in the mid three-digit millions of euros, using on-premise-ready models that preserve data sovereignty. src

Tesla’s AI5 chip design is nearly finished with AI6 in early stages, targeting a 9-month design cycle for continuous generations of custom AI accelerators. src

PJM projects 4.8% annual electricity demand growth from AI data centers, with consultants forecasting a 25% rise by 2030 and real risk of East Coast rolling blackouts. src

ChatGPT Go launched worldwide at $8/month with 10x more messages than the free tier, and OpenAI will test ads in the free and Go tiers. src

AstraZeneca acquired Modella AI to embed pathology-focused foundation models directly into oncology R&D for faster biomarker discovery. src

Apple is fighting for TSMC capacity now that Nvidia has likely overtaken it as TSMC’s top customer, forcing Apple to compete for leading-edge wafer slots. src

Veo 3.1 adds native 9:16 vertical output for mobile-first short-form video and state-of-the-art upscaling to 1080p and 4K. src

Kaggle launched Community Benchmarks for reproducible multi-step reasoning, code execution, and tool use evaluations across models. src

OpenAI published a response to Elon Musk’s lawsuit, claiming Musk wanted absolute control and proposed merging OpenAI into Tesla before leaving. src

Palantir’s ELITE tool maps deportation targets for ICE with address confidence scores, ingesting government and commercial data for raid prioritization. src

Coding on paper as a deliberate training method forces engineers to slow down and master fundamentals rather than outsourcing cognition to tools. src

Last week

In case you missed it, here’s last week’s overview:

AI Can Do Your Job - Now What? | AI for Software Engineers 77

Two releases this week show how far AI coding tools have come. Claude 4.5 Opus is now more accessible with higher rate limits, and Claude Code has improved its planning capabilities, spending more time on design and less on iteration and enabling enough tokens for developers to use it full-time.

Thanks for reading!

Always be (machine) learning,

Logan
